Computer Science Curriculum: Intersectional Approaches

The Challenge

Computer science (CS) education often hones mathematical and engineering skills, while considering moral, social, and political reasoning beyond its scope. As we have seen in recent years, this can result in programs that amplify social inequities. Google Translate, for example, often defaults to the masculine pronoun when translating news articles from Spanish to English, thereby reinforcing the notion that primarily men are active intellectuals. Similarly, word embedding characterizes typical European American names as pleasant and names associated with African Americans as unpleasant—again exacerbating social biases (Zou & Schiebinger, 2018). Computer science courses that focus solely on technical programming and mathematical approaches fail to prepare students to understand how computing influences legal, governmental, economic, and cultural systems (Ko et al., 2020). Embedding intersectional analysis in core CS courses can sharpen students’ critical skills to recognize systemic injustices perpetrated by technology—and better prepare the scientific workforce for the future.
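The word-embedding claim above can be made concrete with a small association score: how much closer a name's vector sits to "pleasant" words than to "unpleasant" ones. The following is a minimal sketch, not the method of the cited authors. The two-dimensional vectors, the group labels, and the helper names `association` and `audit` are all invented for illustration; a real audit would load vectors from a trained embedding.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def association(word_vec, pleasant, unpleasant):
    """Mean similarity to pleasant words minus mean similarity to unpleasant words."""
    mean_pleasant = sum(cosine(word_vec, p) for p in pleasant) / len(pleasant)
    mean_unpleasant = sum(cosine(word_vec, q) for q in unpleasant) / len(unpleasant)
    return mean_pleasant - mean_unpleasant

# Toy 2-D vectors invented for illustration; NOT taken from any real embedding.
PLEASANT = [(1.0, 0.1), (0.9, 0.2)]
UNPLEASANT = [(0.1, 1.0), (0.2, 0.9)]
NAMES = {
    "name_group_a": (0.8, 0.3),  # hypothetical name from one demographic group
    "name_group_b": (0.3, 0.8),  # hypothetical name from another group
}

def audit(names, pleasant, unpleasant):
    """Association score for every name; the sign shows which pole it leans toward."""
    return {name: association(vec, pleasant, unpleasant) for name, vec in names.items()}

scores = audit(NAMES, PLEASANT, UNPLEASANT)
for name, score in scores.items():
    print(f"{name}: {score:+.3f}")
```

A positive score means the name leans toward the pleasant words and a negative score toward the unpleasant ones. In a classroom exercise, students could run the same function on real embeddings and discuss where a systematic gap between groups comes from.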

Method: Intersectional Approaches

Rethinking concepts such as “technical,” “engineering,” and “programming” can help students recognize that moral, social, and political issues raised by computing technologies are part of computer science and deserve their attention. Computing decisions are value-laden and have impacts on different social groups. This is true whether or not researchers recognize those impacts. When current values are recognized, researchers and students have the opportunity to reflect on them, challenge them, and transform them.

Gendered Innovations:

1. Remaking the Computing Research Ecosystem:

Responsible computing has become a priority in the European Union, the U.S., and elsewhere. A responsible computing ecosystem can be encouraged by integrating intersectional analyses into funding applications, peer-review processes, and company audits, as well as by incentivizing cross-disciplinary partnerships between technologists, humanists, and social scientists.

2. Emerging CS Courses:

Since 2017, universities have been developing “Embedded EthiCS” programs that integrate intersectional sociocultural analysis into core CS courses. This case study highlights some of these emerging programs.

3. Inclusive Language and Visualization in Course Content:

Many concepts and benchmarks in computer science rely on language and imagery that reinforce historical inequities, such as “master” and “slave” terminology or the Lena test image. Rethinking language and visual representations in course content can remove assumptions that limit innovation and help students from diverse backgrounds feel more comfortable and valued.

Intersectional Innovation 1: Remaking the Computing Research Ecosystem

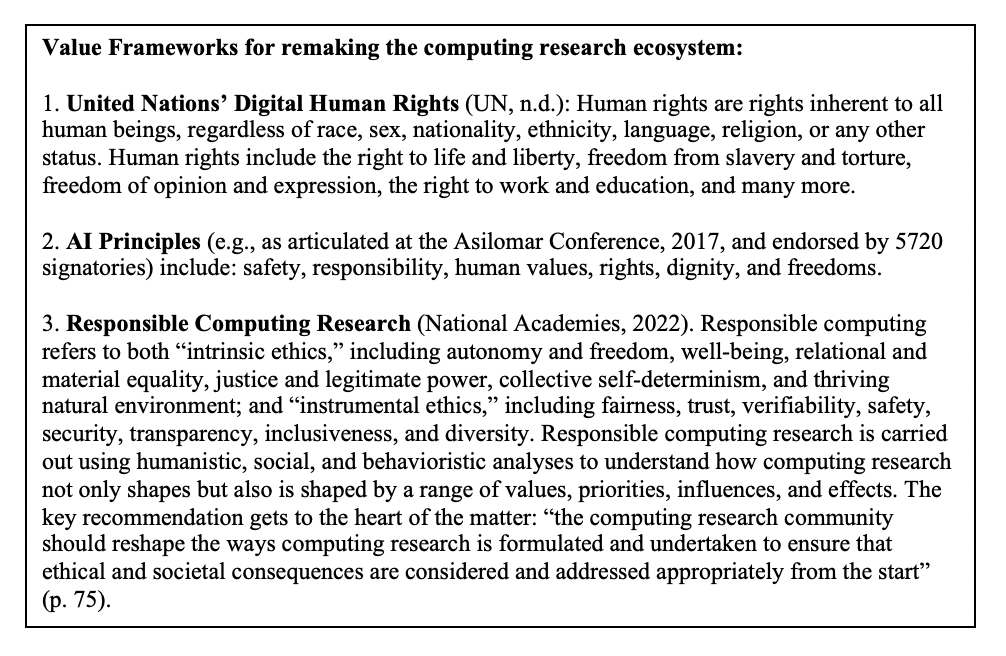

Fostering responsible computing has become a priority in the European Union, the U.S., and elsewhere (EU, 2019). The U.S. National Academies emphasize that “with computing technologies increasingly woven into our society and infrastructure, it is vital for the computing research community to be able to address the ethical and societal challenges that can arise from the development of these technologies, from the erosion of personal privacy to the spread of false information” (National Academies, 2022). Computer researchers need to anticipate social risks from the very beginning. Failure to consider the social, political, and economic dimensions early in research can lead to harm.

1) Research funders can reconfigure granting processes to support interdisciplinary work between technologists and humanists/social scientists—disciplines often siloed in different branches of funding agencies. Funding can incentivize new research partnerships (National Academies, 2022). Application evaluation needs to consider both the technical excellence and social benefits of a proposal, with special attention to gender, race, and intersectional social analysis. For example, the Human-Centered AI Institute at Stanford University requires review of research proposals by both a panel of technologists and a panel of humanists and social scientists. By putting these policies into place, research agencies help cultivate research that enhances social benefits and minimizes social harms across the whole of society.

2) Editorial boards of peer-reviewed journals and conferences can further support these efforts by requiring sophisticated critical sex, gender, race, intersectional, and social analysis when selecting papers for publication. The NeurIPS (Neural Information Processing Systems) conference, for example, conducts ethical reviews before accepting papers (Bengio et al., 2021). Journals such as Nature and The Lancet require sex and gender analysis, where relevant (Gendered Innovations, 2022).

3) Universities and research institutions can integrate knowledge of sex, gender, race, and broader intersectional social analysis into core engineering, design, and computer science curricula. Many universities host stand-alone courses on these topics in the humanities and social sciences. These are important to prepare students in those fields for collaboration, but we also need critical social analysis embedded in core courses in the natural sciences, CS, medicine, and engineering.

4) Industry. Numerous companies have promoted AI Principles similar to those articulated at the Asilomar Conference in 2017 (Future of Life Institute, 2017). It will be important to survey and audit company principles and policies for gender, race, and intersectional analysis. Industry can facilitate achieving these AI Principles by hiring employees trained to work in interdisciplinary teams that include technologists, humanists, and social scientists, and who have cultivated skills to evaluate the potential social benefits and harms of their products, services, and infrastructures.

This case study focuses on university training, because universities prepare the future workforce. Critical intersectional social analysis must be part of core natural science and engineering courses. Efforts have been made in medicine: the Charité University Hospital in Berlin, Germany, for instance, has successfully integrated sex and gender analysis throughout all six years of medical training, from early basic science to later clinical modules (Ludwig et al., 2015). (These are not materials on professional ethics; they are on sex/gender analysis in health and medicine.) However, this is a rare example, and universities must do more to prepare the scientific workforce for the future.

Intersectional Innovation 2: Emerging CS Courses

Since 2017, universities have been developing programs, first called “Embedded EthiCS” at Harvard University, that integrate ethical reasoning into core CS courses (Grosz et al., 2019; Garrett et al., 2020). These initiatives are also referred to as “responsible computing,” among a variety of other names. We recommend embedding intersectional sociocultural analysis that draws skills broadly from the humanities and social sciences. We highlight some emerging programs:

- ● Embedded EthiCS (Harvard University): Beginning in 2017, Professor Barbara Grosz and her CS team developed a collaboration with Professor Alison Simmons and the Philosophy department to teach CS students how to consider the ethical and social implications of their work. Embedded EthiCS introduces ethics directly into the standard CS curriculum (Grosz et al., 2019).

Embedded EthiCS teaches concepts such as “responsibility” applied to climate change, cloud security, and performance versus correctness in system design; “rights” in relation to software verification and validation, electronic privacy and big data systems, and tracking censorship; “fairness” in algorithmic fairness and recidivism prediction, discrimination, and machine learning; “equality” applied to ASCII, Unicode, and the ethics of natural language representation; “discrimination” related to bias and stereotypes in word-embedding software; and more (see image). The ethics modules are available online.

- ● Embedded EthiCS (Stanford University): In 2020, Stanford initiated an Embedded EthiCS program that incorporates ethics-focused lectures, assignments, and assessments in core CS courses. The program emerged from a collaboration between the Computer Science Department, the McCoy Family Center for Ethics in Society, and the Institute for Human-Centered Artificial Intelligence (Miller, 2020).

The goal is to develop ethics materials that relate directly to the technical course content. For example, an intro-level programming course incorporates a lecture and assignment that address the problem of bias in data sets that may lead to representational and allocation harms. Another introductory course discusses the ethics of using data for priority queues through the example of housing allocation in Los Angeles. Further, a course on algorithms discusses the potential impact of formalizing real-world problems to make them algorithmically tractable, including how foregrounding an optimization function may render other important values invisible.

- ● The Mozilla Teaching Responsible Computing Playbook outlines specific ways to foster curriculum that is successful, institutionally sustainable, and useful for students entering the workforce. Responsible computing is loosely defined as “designing computing artifacts that need to take society into consideration.” Mozilla emphasizes the need for collaboration across disciplines: “Philosophers ask about the nature of privacy and why we value it; political scientists ask how technology influences political systems; critical race theorists investigate how technology reproduces and reinforces institutional racism; psychologists consider how technology shapes the way we think and behave; disability studies analyze how disabled users ‘hack’ existing technology to make it work for them. For these reasons, bringing the perspectives, concepts, and tools from other disciplines into conversation with responsible computing efforts can strongly enhance both teaching and research” (Little et al., 2021).

- ● The Impossible Project and Teaching Responsible Computing (University at Buffalo) includes a redesigned first-year seminar that features coursework (lecture slides, assignments, recitation activities) specifically on critical approaches to computing. The course culminates in a competition where students pitch solutions based on a theme (e.g., antiracist computing or bias in data-driven criminal risk assessment).

- ● Responsible Computing Fellows (University of Colorado Boulder): undergraduate students across campus apply for a fellowship program that involves seminar-style discussions on current events and ethical issues in technology. Students also participate in events with guest speakers and have a community of peers to discuss issues related to responsible computing, employment, coursework, and more.

- ● Responsible Problem Solving (Brown University, Haverford College, University of Utah) includes slides, assignments, instructor notes, and sample datasets for topics in data structures and algorithms related to responsible computing (Cohen et al., 2021).

- ● Embedding Ethics in CS Classes through Role Play (Georgia Tech) includes course components with written scenarios, role descriptions, supplemental readings, and teaching guides for activities related to autonomous buses and AI applications in university admissions.

- ● The Social and Ethical Responsibilities of Computing (MIT) is facilitating the development of responsible “habits of mind and action” for those who create and deploy computing technologies.

- ● Embedded Ethics (Georgetown) pairs Ethics Lab philosophers and designers with faculty across disciplines to co-design ethical discussions and run customized, creative exercises in their coursework. Students are exposed to key ethical concepts and frameworks and provided the opportunity to debate, apply, and internalize context-specific lessons in order to develop essential skills and gain a real-world understanding of their potential impacts. A partnership with the Department of Computer Science was initiated in 2019.

- ● Computing Narratives: Website with a variety of narratives that can support incorporating ethical content into computer science classes.
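The Stanford example above, discussing the ethics of priority queues through housing allocation, lends itself to a short classroom sketch. The code below is an illustration under stated assumptions, not material from any of the listed programs and not a model of any real allocation system: the `Applicant` fields, the sample values, and the `serve_order` helper are hypothetical. It shows that the data structure is the same in both runs, and the choice of priority function alone decides who is served first.

```python
import heapq
from dataclasses import dataclass

@dataclass(frozen=True)
class Applicant:
    name: str
    days_waiting: int
    vulnerability_score: int  # hypothetical 0-10 assessment, invented for this sketch

def serve_order(applicants, priority):
    """Return names in the order a max-priority queue would serve them."""
    # heapq is a min-heap, so the priority is negated.
    # The index breaks ties in favor of whoever was listed first.
    heap = [(-priority(a), i, a.name) for i, a in enumerate(applicants)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]

# Fabricated applicants for illustration only.
APPLICANTS = [
    Applicant("A", days_waiting=400, vulnerability_score=3),
    Applicant("B", days_waiting=30, vulnerability_score=9),
    Applicant("C", days_waiting=200, vulnerability_score=6),
]

by_wait = serve_order(APPLICANTS, lambda a: a.days_waiting)
by_need = serve_order(APPLICANTS, lambda a: a.vulnerability_score)

print(by_wait)  # ['A', 'C', 'B']
print(by_need)  # ['B', 'C', 'A']
```

Neither ordering is technically "wrong"; each encodes a different value judgment (time waited versus assessed need). That is the kind of question an embedded ethics module can ask students to defend alongside the implementation.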

Intersectional Innovation 3: Inclusive Language and Visualization in Course Content

Concerted efforts have been made to make engineering and CS more appealing to traditionally underrepresented groups. A key approach has focused on breaking down stereotypes (National Academy of Engineering, 2008).

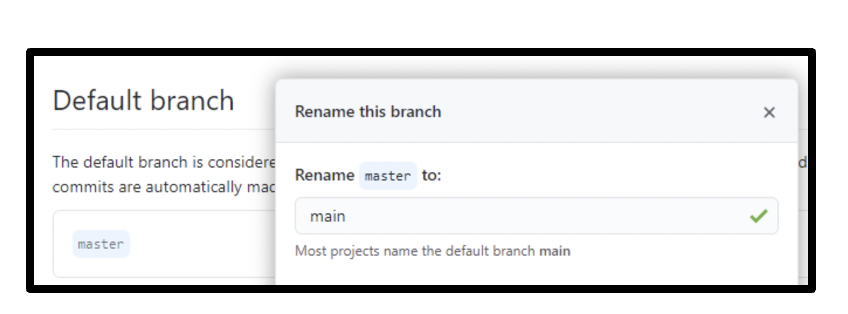

In addition to increasing the numbers of underrepresented groups, it is important to also change the content and methods of teaching and interacting within the classroom. Many concepts in computer science rely on language that reinforces historical inequities. Examples include the terms “master” and “slave” to describe server architecture in a distributed system (fig. 1) and the use of “so easy your mom can do it!” as a benchmark for user experience.

Figure 1: GitHub is a code hosting service for software development that provides version control. Historically, the first branch for a new code repository was named the “master” branch. However, conversations within the Git project and the Software Freedom Conservancy led to changes within GitHub to rename the default branch of the repository “main.”

Similarly, many benchmark images are problematic. One example is Lena, a standard test image used in the field of computer vision since 1973. Lena is a picture of the Swedish model Lena Forsén cropped from the centerfold of the November 1972 issue of Playboy magazine. Not only is the image sexist, but it sets “white” as the standard for image processing, which has led to many problems in facial recognition and other processes and programs (Buolamwini & Gebru, 2018). Rethinking language and visual representation serves as a relatively simple intervention to begin to challenge inequitable norms in the classroom.
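The facial recognition problems cited above are typically surfaced by reporting accuracy per intersectional subgroup instead of as a single number. The sketch below illustrates that idea only. The counts are fabricated for teaching purposes and are not results from any real system or from the cited study, and the record fields and the helpers `accuracy_by_group` and `make_records` are hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records, keys):
    """Accuracy per subgroup; a subgroup is the tuple of values for `keys`.

    With keys=() every record falls into one group, giving overall accuracy.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        group = tuple(record[k] for k in keys)
        total[group] += 1
        correct[group] += int(record["correct"])
    return {group: correct[group] / total[group] for group in total}

def make_records(skin, gender, n_correct, n_wrong):
    """Build fabricated evaluation records for one subgroup."""
    base = {"skin": skin, "gender": gender}
    return ([dict(base, correct=True) for _ in range(n_correct)]
            + [dict(base, correct=False) for _ in range(n_wrong)])

# Fabricated counts for illustration only; NOT results from any real system.
RECORDS = (
    make_records("lighter", "male", 10, 0)
    + make_records("lighter", "female", 9, 1)
    + make_records("darker", "male", 9, 1)
    + make_records("darker", "female", 6, 4)
)

overall = accuracy_by_group(RECORDS, ())
by_gender = accuracy_by_group(RECORDS, ("gender",))
by_both = accuracy_by_group(RECORDS, ("skin", "gender"))

print(overall)
print(by_gender)
print(by_both)
```

In this toy data the overall accuracy looks acceptable and the single-axis breakdown by gender shows a moderate gap, but only the intersectional breakdown reveals that one subgroup fares much worse than any single axis suggests.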

Method: Rethinking Language and Visual Representations

Rethinking language and visual representations can remove assumptions that may limit innovation and discovery. Using inclusive language in the classroom has the potential to help make students from diverse backgrounds feel more comfortable and valued.

Next Steps

1. Evaluate Embedded EthiCS and responsible computing courses. More research is needed on how to effectively evaluate courses that embed sophisticated intersectional social analysis (Horton et al., 2022).

2. Build embedded ethics or responsible computing into the CS major.

Works Cited

AI Principles. (2017). Future of Life Institute. Retrieved October 18, 2022, from https://futureoflife.org/open-letter/ai-principles/

Bengio, S., Beygelzimer, A., Crawford, K., Fromer, J., Gabriel, I., Lewandowski, A., Raji, D., & Ranzato, M. (2021, August 23). NeurIPS 2021 Ethics Guidelines. Neural Information Processing Systems Blog. https://blog.neurips.cc/2021/08/23/neurips-2021-ethics-guidelines

Buolamwini, J., & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency, 77–91. https://proceedings.mlr.press/v81/buolamwini18a.html

Cohen, L., Precel, H., Triedman, H., & Fisler, K. (2021). A New Model for Weaving Responsible Computing Into Courses Across the CS Curriculum. Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, 858–864. https://doi.org/10.1145/3408877.3432456

European Union. (2019). EU guidelines on ethics in artificial intelligence: Context and implementation (European Parliament). https://www.europarl.europa.eu/RegData/etudes/BRIE/2019/640163/EPRS_BRI(2019)640163_EN.pdf

Fiesler, C., Garrett, N., & Beard, N. (2020). What Do We Teach When We Teach Tech Ethics? A Syllabi Analysis. Proceedings of the 51st ACM Technical Symposium on Computer Science Education, 289–295. https://doi.org/10.1145/3328778.3366825

Garrett, N., Beard, N., & Fiesler, C. (2020). More Than “If Time Allows”: The Role of Ethics in AI Education. Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, 272–278. https://doi.org/10.1145/3375627.3375868

Gendered Innovations. (2022). Sex and Gender Analysis Policies of Peer-Reviewed Journals.

Grosz, B. J., Grant, D. G., Vredenburgh, K., Behrends, J., Hu, L., Simmons, A., & Waldo, J. (2019). Embedded EthiCS: Integrating ethics across CS education. Communications of the ACM, 62(8), 54–61. https://doi.org/10.1145/3330794

Horton, D., McIlraith, S. A., Wang, N., Majedi, M., McClure, E., & Wald, B. (2022). Embedding Ethics in Computer Science Courses: Does it Work? Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 1, 481–487. https://doi.org/10.1145/3478431.3499407

Ko, A. J., Oleson, A., Ryan, N., Register, Y., Xie, B., Tari, M., Davidson, M., Druga, S., & Loksa, D. (2020). It is time for more critical CS education. Communications of the ACM, 63(11), 31–33. https://doi.org/10.1145/3424000

Little, M., Patterson, A., & Ricks, V. (2021). Working Across Disciplines (Teaching Responsible Computing Playbook). Mozilla Responsible Computer Science Challenge. https://foundation.mozilla.org/en/what-we-fund/awards/teaching-responsible-computing-playbook/topics/working-across-disciplines/

Ludwig, S., Oertelt-Prigione, S., Kurmeyer, C., Gross, M., Grüters-Kieslich, A., Regitz-Zagrosek, V., & Peters, H. (2015). A Successful Strategy to Integrate Sex and Gender Medicine into a Newly Developed Medical Curriculum. Journal of Women’s Health, 24(12), 996–1005. https://doi.org/10.1089/jwh.2015.5249

Miller, K. (2020, October 5). Building an Ethical Computational Mindset. Stanford Human-Centered Artificial Intelligence. https://hai.stanford.edu/news/building-ethical-computational-mindset

National Academies of Sciences, Engineering, and Medicine. (2022). Fostering Responsible Computing Research: Foundations and Practices. The National Academies Press. https://doi.org/10.17226/26507

National Academy of Engineering. (2008). Changing the Conversation: Messages for Improving Public Understanding of Engineering. The National Academies Press. https://doi.org/10.17226/12187

UN Office of the Secretary-General’s Envoy on Technology. (n.d.). Digital Human Rights. Retrieved October 18, 2022, from https://www.un.org/techenvoy/content/digital-human-rights

Zou, J., & Schiebinger, L. (2018). AI can be sexist and racist—It’s time to make it fair. Nature, 559(7714), 324–326. https://doi.org/10.1038/d41586-018-05707-8

Domestic robots have the potential to improve quality of life through performing household tasks as well as providing personal assistance and care. To be successful, domestic robots need to be able to work in households with different physical environments as well as user types, values, and power relations.

Gendered Innovations:

1. Understanding the Needs and Preferences of Diverse Households

2. Value Alignment between Robot and Household

3. Overcoming Domain Gaps between Training and Deployment Environments

4. Addressing Domestic and Global Power Dynamics