Sex, Gender, & Intersectional Analysis

Case Studies

- Science

- Health & Medicine

- Chronic Pain

- Colorectal Cancer

- Covid-19

- De-Gendering the Knee

- Dietary Assessment Method

- Gendered-Related Variables

- Heart Disease in Diverse Populations

- Medical Technology

- Nanomedicine

- Nanotechnology-Based Screening for HPV

- Nutrigenomics

- Osteoporosis Research in Men

- Prescription Drugs

- Systems Biology

- Engineering

- Assistive Technologies for the Elderly

- Domestic Robots

- Extended Virtual Reality

- Facial Recognition

- Gendering Social Robots

- Haptic Technology

- HIV Microbicides

- Inclusive Crash Test Dummies

- Human Thorax Model

- Machine Learning

- Machine Translation

- Making Machines Talk

- Video Games

- Virtual Assistants and Chatbots

- Environment

Machine Translation: Analyzing Gender

The Challenge

Machine translation (MT) becomes increasingly important in a global world. Although error rates are still high, MT system accuracies are improving incrementally. Some errors in current systems, however, are based on fundamental technological challenges that require non-incremental solutions. One such problem is related to gender: State-of-the-art translation systems like Google Translate or Systran massively overuse masculine pronouns (he, him) even where the text specifically refers to a woman (Minkov et al., 2007). The result is an unacceptable infidelity of the resulting translations and perpetuation of gender bias.

Method: Analyzing Gender

The reliance on a "masculine default" in modern machine translation systems results from current systems that do not determine the gender of each person mentioned in a text. Instead, the translation is produced by finding all the possible matches for a given phrase in large collections of bilingual texts, and then choosing a match based on factors such as its frequency in large text "corpora" (or bodies of text). Masculine pronouns are over-represented in the large text corpora that modern systems are trained on, resulting in over-use in translations. In July 2012 the Gendered Innovations project convened a workshop to discuss potential solutions. Improving feminine-masculine pronoun balance in these corpora, for example, would still not fix the problem, since it will simply cause both women and men to be randomly referred to with the wrong gender. Instead, it is crucial to develop algorithms that explicitly determine the gender of each person mentioned in text and use this computed gender to inform the translation. Such algorithms could avoid the masculine default and also increase the quality of translation overall.

Gendered Innovations:

1. Studying the Male Default in Machine Translation

2. Detecting the Gender of Entities to Improve Translation Algorithms (research in progress)

3. Introducing Gender-Diverse Translations

4. Integrating Gender Analysis into the Engineering Curriculum

Gendered Innovation 1. Studying the Male Default in Machine Translation

Method: Analyzing Gender

Gendered Innovation 2. Detecting the Gender of Entities to Improve Translation Algorithms

Method: Rethinking Research Priorities and Outcomes

Gendered Innovation 3: Introducing Gender-Diverse Translations

Gendered Innovation 4. Integrating Gender Analysis into the Engineering Curriculum

Conclusions

The Challenge

Machine Translation (MT) is an important area of Natural Language Processing (NLP) and a crucial application for an increasingly globalized world. Although machine translation error rates are still high, system accuracies are improving as developers make incremental improvements. Some errors in current systems, however, are based on fundamental technological challenges that require non-incremental solutions. One such problem is gender: State-of-the-art translation systems like Google Translate or Systran massively overuse male pronouns (he, him) even where the text is actually referring to a woman (Minkov et al., 2007). The result is an unacceptable infidelity of the resulting translations and perpetuation of gender bias.

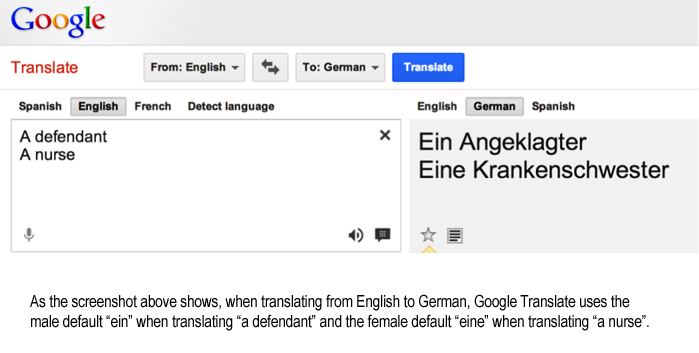

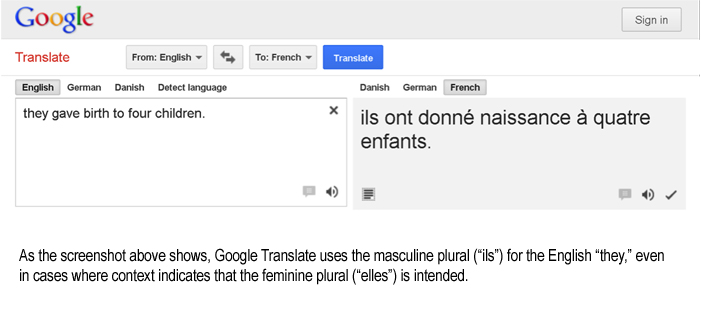

The problem can occur whether translating from English to other languages, or from other languages to English. It is especially common in translating from a weakly gender-inflected language (such as English) to a more strongly gender-inflected language (such as most other Indo-European languages) (Banea et al., 2008). For example, in the English phrase "a defendant was sentenced" it is unclear whether the defendant is a woman or man. When translated into German, this phrase must be rendered in one of two forms—a form specifying a woman defendant or a form specifying a man defendant (Frank et al., 2004):

| English: | German: |

| "A defendant was sentenced." | "Ein Angeklagter wurde verurteilt." |

| (The gender of the defendant is unspecified.) | (The defendant is specified as being a man&mdas;the current Google Translate default.) or

"Eine Angeklagte wurde verurteilt." |

Humans are able to use the context (the previous or following sentences in the document) to ascertain the gender of the defendant. Current machine translation systems, however, are incapable of using previous or following sentences. The result is that systems simply rely on frequency, choosing whichever gender is more frequently used for this exact phrase—"ein Angeklagter wurde" or "eine Angeklagte wurde"—in the text corpus. Mostly this results in a male default, although sometimes the default is female—see figure below.

This problem also occurs when translating from other languages into English. For example, the Spanish pronoun "su" can translate into English as "his" or "her." This problem is common when translating from a language in which pronouns are often omitted (a "pro-drop" language like Spanish, Chinese, and Japanese) into a language like English that does not omit pronouns.

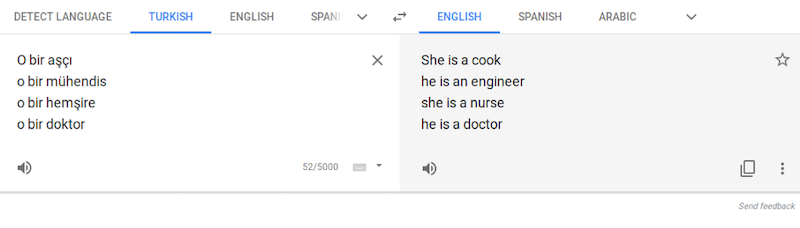

Turkish provides another example. The gender-neutral pronoun "o" is translated into English as "he" or "she" in a manner that reflects stereotypical assumptions, such as: "she is a cook," "he is an engineer," "she is a nurse," or "he is a doctor." The same is true for Finnish, Estonian, Hungarian, and Persian (Caliskan et al., 2017, supplement).

Gendered Innovation 1: Studying the Male Default in Machine Translation

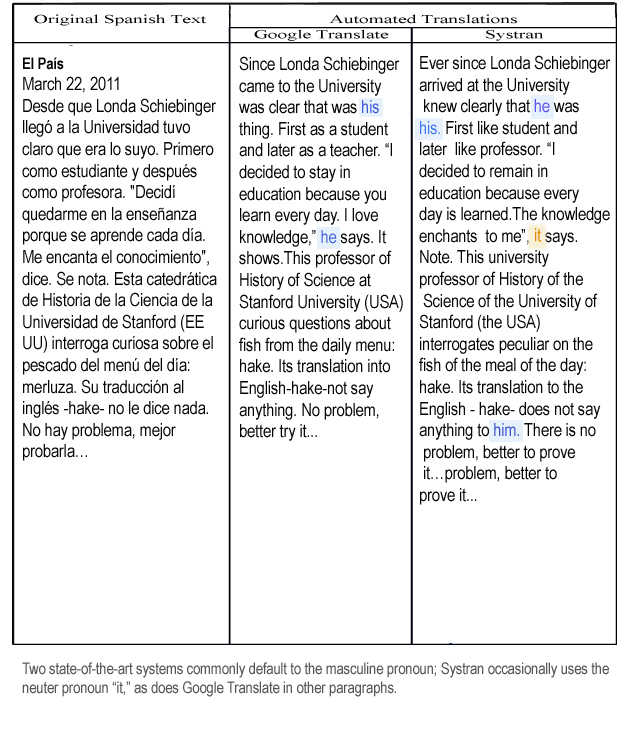

Translating from Spanish to English causes a number of gender problems with modern machine translation software. One reason is that Spanish is a pro-drop language. Determining which pronoun to use when translating into English is thus particularly difficult. In March 2011, for example, Londa Schiebinger was interviewed in a Spanish newspaper. Translations into English of the resulting Spanish language article reveal symptoms of the difficulty—see table below.

These automated translations use incorrect pronouns in spite of the many cues to the correct gender in the text, such as:

- "Londa" is a woman's name in English, and is listed as such on online name lists.

- The Spanish source text contains gender-inflected words such as "professor" (profesora, feminine form) and descriptions such as "mujer" (woman) that indicate that "Londa" is a woman.

- "no le dice nada."

- that literally reads:

- "doesn't say anything to him/her."

- the Spanish word "le" ("him/her") must mean "her," and not "him."

Method: Analyzing Gender

Machine translation systems have difficulties generating the correct gender for entities because they currently have not solved three basic problems required to make use of the cues to gender that human readers rely on:

- 1. Human translators know that a good translation is one that has the same meaning as the original.

- 2. Human readers recognize when people are being talked about in a text, and represent facts about the people they are reading about, like their gender. They do this even when the people are not mentioned explicitly. For example, a verb that has no pronoun in a pro-drop language needs to be translated with a pronoun in a language like English. A human translator realizes that the phrase:

- "'Me encanta el conocimiento,' dice."

- that literally reads:

- "'I like knowledge,' said."

- is referring to a person who said something, and hence should be translated

- "'I like knowledge,' she said."

- 3. Human readers rely on information about which nouns and pronouns corefer in neighboring sentences. For example, the fact that "Londa" is mentioned in the first sentence as the subject of the interview tells a human that the phrase "sus trabajos," or "her/his work," is referring to Londa's work, and hence the translated pronoun should have the same gender as Londa, and appear as "her" and not "his." Human readers know that texts are coherent in this way; they don't just jump randomly from person to person in different sentences. Human translators can recognize coreference in the language they are translating from, and produce coreference in the language they are translating into.

- 1. Our current systems pick a translation, not because it has the same meaning as the original, but because it is most likely to use many of the same words or phrases as a human might use in translating. These are similar but not identical requirements.

- 2. Our current systems do not understand that these sentences are referring to people who have gender. They do not represent gender at all.

- 3. Our current systems do not compute coreference. Current systems are very limited in their ability to use context: They translate a single sentence at a time, and hence are completely unable to use information from previous sentences (like the mention of "Londa") to help in translating following sentences.

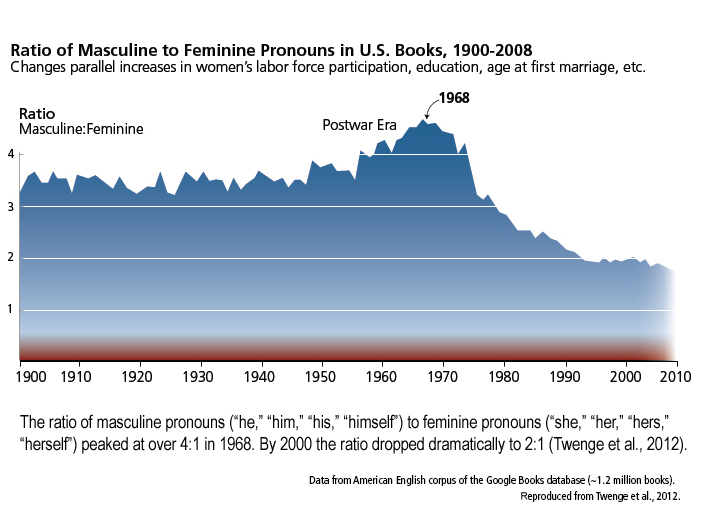

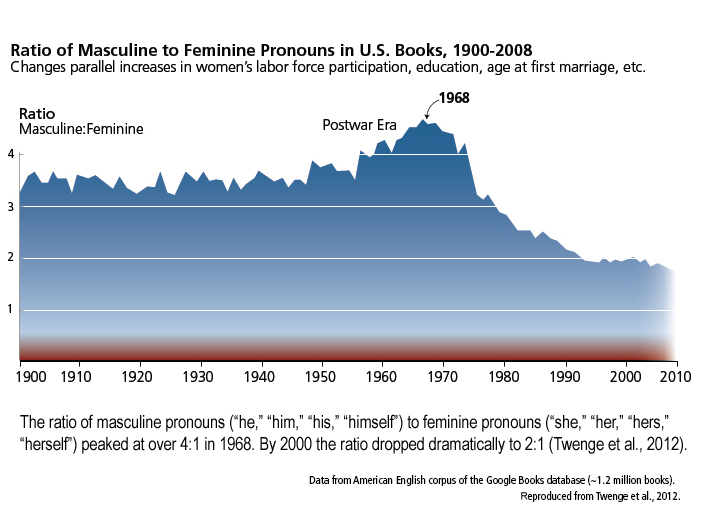

MT systems are trained on two separate kinds of text corpora. One is a "parallel corpus" that has a text in one language aligned with its translation in a second language. The other is a large monolingual corpus in just one language that is used to model the grammar of the language being translated into. Both of these types of corpora can lead to errors in pronoun gender. One study on a large monolingual corpus of English, the Google Books corpus showed that masculine pronouns are significantly more frequent in English than feminine pronouns, although this ratio has decreased over time—see chart below. This bias is likely what led to the male pronouns that appeared in the interview example above.

Major organizations now favor or require inclusive language (Rose, 2010). In English, "she or he" is often used when referring to a person whose sex is unknown. Often, recasting a sentence in the plural solves the problem of referents (see Rethinking Language and Visual Representations). As a result, translation algorithms that use "he" as a generic are out of step with modern usage.

Machine translation that does not factor in current language conventions also runs the risk of reinforcing gender inequality. When translation programs default to "he said," the occurrence of the masculine pronoun on the web increases. This may lead to the long-term, unintended consequence of reversing the positive trend of equality in language portrayed on the graph above.

A new review of the literature focuses not only on bias related to women but also non-binary people to overcome the “linguistic visibility” of this group (Kostikova, 2023).

Gendered Innovation 2: Detecting the Gender of Entities to Improve Translation Algorithms

We suggest the development of a translation algorithm that will identify the gender of a referenced person and hence generate translations with the correct pronouns. The algorithm would be based on coreference resolution (Ng et al., 2002; Zhou et al., 2004); i.e., deciding which names, noun phrases, or pronouns mentioned in a text refer to the same real-world person or entity. This task proceeds in roughly three stages:

1. Find all the entities (names, pronouns, nouns) mentioned in a text.

2. Determine the possible animacy (human or inanimate), gender (masculine or feminine) and number (singular and plural) of each of these mentions.

3. Use probabilistic algorithms that rely on the structure of the entire discourse, the grammatical structure of each individual sentences, and the potential animacy, gender, and number of each entity to group together those mentions which are likely to corefer.

Coreference would have to be run for English, and for every language that we are translating from and into. Well-performing coreference algorithms now exist for English and a few other languages (Chinese and Arabic, among others). The performance of these algorithms (Fernandes et al., 2012; Chen et al., 2012; Lee et al., 2013) is still not extremely high, but high enough to suggest that they might help improve gender assignment in modern MT algorithms.

Coreference resolution for machine translation would also need to solve the subproblem of detecting that a pronoun must be inserted when translating a sentence from a pro-drop language like Spanish or Chinese to a pronoun-required language like English. This task is referred to as "zero-anaphora detection," and computational literature is beginning to propose successful methods for detecting these zeros (Zhao et al., 2007; Kong et al., 2010; Iida et al., 2011). The next step is to incorporate these zero detection algorithms into state-of-the-art coreference and machine translation systems.

The algorithm additionally would require that machine translation systems be able to take into consideration information from other sentences before and after the current one. Recent algorithms for "document-level language modeling" may help in modeling the linguistic context cross sentences (Momtazi et al., 2010). New "document-wide decoding" algorithms have only recently made it possible to incorporate such long-distance information into the translation algorithms themselves (Hardmeier et al., 2012).

Finally, the algorithm would require changing the way translations are optimized to incorporate information about meaning of the sentence, or at least meaning about the successful recognition of entities, coreference, and coherence.

Of course many additional challenges will arise in developing these algorithms, and any of our suggestions for solutions to these subproblems may fail, requiring alternative directions. The key insight is that solving the gender bias in machine translation will require explicit detection of gender for people and use coreference information to inform the translation.

Method: Rethinking Research Priorities and Outcomes

Such algorithms also have the potential to increase the performance of machine translation in people-rich genres like fiction, where current translation systems fail due to their inability to successfully model coherence and coreference (Voigt et al., 2012).

Gendered Innovation 3: Gender-Diverse Translations

Google Translate attempts to promote fairness by providing both feminine and masculine translations for some gender-neutral words. For example, for the gender-neutral phrase in Turkish, “o bir doktor,” Google Translate will now show “she is a doctor” and “he is a doctor” (instead of simply “he is a doctor”) (Kuczmarski, 2018).Further inclusive solutions might include non-binary gender translations. People who don’t catergorize themselves as women or men may use the terms “they/them” in English or “hen” in Swedish. “Hen” is a gender-neutral pronoun intended as an alternative to the gender-specific “hon” (she) and “han” (he).

Gendered Innovation 4: Integrating Gender Analysis into the Engineering Curriculum

A deeper fix will be to integrate an awareness of gender into the computer science curriculum so that engineers don't make such errors in the future (see video on integrating ethics into CS curriculum-Grosz et al., 2019). It is important that schools of engineering include basic information on gender analysis in their courses for any field with a human endpoint. If engineers graduating from Stanford had learned the basics of gender analysis, they would not have unconsciously developed a translation program that defaulted to the male pronoun. Design should be gender inclusive from the beginning so that companies do not have to retrofit technologies for the excluded gender, whether that is women, men, or gender-diverse people.Many schools of engineering and especially departments of computer science have redesigned their curriculum to attract more women into their fields. That’s important. But we also need curriculum reform to teach computer science and engineering students sophisticated methods of sex and gender analysis.

Conclusions

Integrating each of these methods into modern machine translation systems is challenging, and will likely require new algorithms, new understandings, and new tools. Systems that explicitly understand when people are mentioned in a text, and use rich contextual information to understand more information about these people, including their gender, have the potential to improve the readability and quality of machine translations.

Works Cited

Arnold, D., Sadler, L., & Humphreys, R. (1993). Evaluation: An Assessment. Machine Translation, 8 (1-2), 1-24.

Babych, B., & Hartley, A. (2003). Improving Machine Translation Quality with Automatic Named Entity Recognition. Proceedings of the 7th International Conference on Empirical Methods in Natural Language Processing (EMAT), Budapest, April 13

Banea, C., Mihalcea, R., Wiebe, J., & Hassan, S. (2008). Multilingual Subjectivity Analysis Using Machine Translation. Proceedings of the Association for Computational Linguistics 12th Annual Conference on Empirical Methods in Natural Language Processing (EMNLP), Honolulu, October 25-27.

Bergsma, S. (2005). Automatic Acquisition of Gender Information for Anaphora Resolution. Proceeding of Advances in Artificial Intelligence, 18th Conference of the Canadian Society for Computational Studies of Intelligence, Victoria, May 9-11.

Bergsma, S., Lin, D., & Goebel, R. (2009). Glen, Glenda or Glendale: Unsupervised and Semi-Supervised Learning of English Noun Gender. Proceedings of the 13th Conference on Computational Natural Language Learning, Boulder, Colorado, June 4-5.

Byron, D. (2001). The Uncommon Denominator: A Proposal for Consistent Reporting of Pronoun Resolution Results. Computational Linguistics, 27 (4), 569-577.

Caliskan, A., Bryson, J., & Narayanan, A. (2017). Semantics derived automatically from language corpora contain human-like biases. Science, 356 (6334), 183-186.

Chen, C. & Ng, V. (2012). Combining the Best of Two Worlds: A Hybrid Approach to Multilingual Coreference Resolution. Proceedings of the 24th International Conference on Computational Linguistics, Mumbai, December 8-15.

Frank, A., Hoffmann, C., & Strobel, M. (2004). Gender Issues in Machine Translation. Lingenio Gmbh, Heidelberg.

Fernandes, E., Nogueira dos Santos, C., & Milidiú R. (2012). Latent Structure Perceptron with Feature Induction for Unrestricted Coreference Resolution. Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Korea, July 12-14, 41-48.

Grosz, B.J., Grant, D. G., Vredenburgh, K., Behrends, J., Hu, L., Simmons, A., % Waldo, J. (2019). Communications of the ACM, 62 (8), 54-61.

Hardmeier, C., Nivre, J.,& Tiedemann, J. (2012). Document-Wide Decoding for Phrase-Based Statistical Machine Translation. Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning, Jeju Island, Korea, July 12-14, 1179-1190.

Hovy, E., Marcus, M., Palmer, M., Ramshaw, L., & Weischedel, R. (2006). OntoNotes: The 90% Solution. Proceedings of the Human Language Technology Conference of the North American Chapter of the Association for Computational Linguistics, New York, June, 57-60.

Iida, R., & Poesio, M. (2011). A Cross-Lingual ILP Solution to Zero Anaphora Resolution. Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics, Portland, Oregon, June 19-24, 804-813.

Johnson, M. (2020). A Scalable Approach to Reducing Gender Bias in Google Translate.

Kong, F. & Zhou, G. (2010). A Tree Kernel-based Unified Framework for Chinese Zero Anaphora Resolution. Proceedings of the Conference on Empirical Methods in Natural Language Processing, Cambridge, Massachusetts, October 9-11.

Kostikova, A. (2023). Gender-neutral Language Use in the Context of Gender Bias in Machine Translation (A Review Literature). Journal of Computational and Applied Linguistics, 1, 94-109.

Kuczmarski, J. (2018). Reducing Gender Bias in Google Translate, https://www.blog.google/products/translate/reducing-gender-bias-google-translate/. Accessed 2 July 2019.

Lee, H., Chang, A., Peirsman, Y., Chambers, N., Surdeanu, M., & Jurafsky, D. (2013). Deterministic Coreference Resolution Based on Entity-Centric, Precision-Ranked Rules. Computational Linguistics, 39(4).

Minkov, E., Toutanova, K., & Suzuki, H. (2007). Generating Complex Morphology for Machine Translation. Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics, June 23-30, Prague.

Momtazi, S., Faubel, F., & Klakow, D. (2010). Within and Across Sentence Boundary Language Model. Proceedings of Interspeech, Makuhari, Japan, September 26-30.

Ng, V. & Cardie, C. (2002). Improving Machine Learning Approaches to Coreference Resolution. Proceedings of Association for Computational Linguistics, Philadelphia, July, 104-111.

Pradhan, S., Ramshaw, L., Marcus, M., Palmer, M., Weischedel, R., & Xue, N. (2011). Conll-2011 Shared Task: Modeling Unrestricted Coreference in Ontonotes. Proceedings of the 15th Conference on Computational Natural Language Learning, Portland, Oregon, June 23-24,1-27.

Rose, L. (2012). The Supreme Court and Gender-Neutral Language: Setting the Standard or Lagging Behind? Duke Journal of Gender Law and Policy, 17 (1), 81-131.

Twenge, J., M., Campbell, W., & Gentile, B. (2012). Male and Female Pronoun Use in U.S. Books Reflects Women's Status, 1900-2008. Sex Roles, 67, (9-10), 488-493.

U.S. Social Security Administration. (2012). Popular Baby Names: National Data. Washington, D.C.: Government Publishing Office (GPO).

Vogel, A., & Jurafsky, D. (2012). He Said, She Said: Gender in the Association for Computational Linguistics Anthology. Proceedings of the ACL-2012 Special Workshop on Rediscovering 50 Years of Discoveries, Jeju Island, Korea, July 10, 33-41.

Voigt, R., & Jurafsky, D., (2012). Towards a Literary Machine Translation: The Role of Referential Cohesion. Proceedings of the North American Chapter of the Association for Computational Linguistics Workshop on Computational Linguistics for Literature, Montreal, June.

Zhao, S., & Ng, H. (2007). Identification and Resolution of Chinese Zero Pronouns: A Machine Learning Approach. Proceedings of Empirical Methods in Natural Language Processing and Computational Natural Language Learning Joint Conference, Prague, June, 541-550.

Zhou, G., & Su, J. (2004). A High-Performance Coreference Resolution System using a Constraint-based Multi-Agent Strategy. Proceedings of the 20th International Conference on Computational Linguistics, Stroudsburg, Pennsylvania.

A couple of years ago, I was in Madrid and was interviewed by some Spanish newspapers. When I returned home, I ran the articles through Google Translate and was shocked that I was referred to repeatedly as "he." "Londa Schiebinger," "he" said, "he" wrote, "he" thought. Google Translate and its European equivalent, SYSTRAN, have a male default.

How could such a "cool" company as Google make such a fundamental error?

Google Translate defaults to the masculine pronoun because "he said" is more commonly found on the web than "she said." Here is the interesting part (see graph below).

We know from NGram (another Google product) that the ratio of masculine to feminine pronouns has fallen dramatically from a peak of 4:1 in the 1960s to 2:1 since 2000. This exactly parallels the women's movement and massive governmental funding to increase the number of women in science. With one algorithm, Google wiped out forty years of revolution in language and they didn't mean to. This is unconscious gender bias.

Gendered Innovation:

The fix? July 2012, the Gendered Innovations project held a workshop where we invited two natural language processing experts, one from Stanford and one from Google. They listened for about twenty minutes, they got it, and they said, "we can fix that!" Fixing it is great, but constantly retrofitting for women is not the best road forward. It turns out that this is a difficult problem that has not yet been fixed. To avoid such problems in the future, it is crucial that computer scientists design with an awareness of gender from the very beginning.

Importantly, the current masculine default in machine translation may have unintended, long-term consequences. When translation programs default to "he said," the occurrence of the masculine pronoun on the web increases (which will reverse the positive trend of equality in language portrayed on the graph above). We are building a future that reinforces gender inequality when we may not intend to.

A deeper fix will be to integrate gender studies into the engineering curriculum so that engineers don't make such errors in the future.