Domestic Robots: Intersectional Approaches

The Challenge

Domestic robots have the potential to improve quality of life through performing household tasks as well as providing personal assistance and care. To be successful, domestic robots need to be able to work in households with different physical environments as well as user types, values, and power relations.

Method: Analyzing Gender and Intersectionality in Social Robots

There are many complexities governing the use of domestic robots. These robots need to be designed in different ways for diverse living spaces, building styles, household users, and non-humans in the space, such as pets or plants. Robots can impact social dynamics in the household and the broader labor ecosystems. It is important to consider ways the robots can engender trust, meet functional needs of users, and allow for customizability that fits the values of the people in the home. To achieve these goals, robot designers may incorporate different forms of intersectional approaches and engage potential household end-users in co-creation and participatory design—from the first to the final steps of the design process.

Gendered Innovations:

1. Understanding the Needs and Preferences of Diverse Households allows for robots to be used in various physical environments and by multiple users within the house.

2. Value Alignment between Robot and Household is a critical part of the design process that involves understanding the individual household's values and norms—including how cultural and gender differences affect household tasks.

3. Overcoming Domain Gaps Between Training and Deployment: There is often a mismatch between training scenarios and deployment environments in terms of physical space and interpersonal relations. Closing the domain gap through diversity in training tasks, settings, and evaluation metrics is crucial for successful use of domestic robots.

4. Addressing Domestic and Global Power Dynamics: Roboticists must consider how robots can impact social dynamics both in the house and the broader labor market.

The Challenge

Domestic robots have the potential to improve the quality of human life by performing household tasks and by providing personal assistance and care. Users may include older adults who need additional support to stay in the home; children who need educational support; people with specific conditions or disabilities who need assistive care; and people who need general assistance with household tasks (e.g., cleaning, cooking, folding clothes). Many of these tasks typically fall on the shoulders of women in ways compounded by class and economic status. It is important to consider the intersectional aspects of robot design across diverse environments.

It is also important to consider ways the robots can engender trust, meet functional needs of users, and allow for customizability that fits the values of the people in the home. These values can differ by culture and by type of household (e.g., single, family, roommates), as well as among the individuals within a household. They are flexible and may shift over time. To achieve these goals, robot designers may incorporate different forms of intersectional analysis in the design process and work together with potential household end-users in the design of robots—from the first to the final steps of the process.

Gendered Innovation 1: Understanding the Needs and Preferences of Diverse Households

Home robots need to support the accessibility, dignity, preferences, and self-efficacy of a variety of people living in different types of households (fig. 1). These may include:

-

• people living alone, families, roommates, halfway houses, communal homes

• people with disabilities

• people of different ages, heights, and weights

• people across an array of genders, ethnicities and races, languages, and cultures

• lodgers and guests

• non-humans, especially pets and plants.

-

• stand-alone houses

• townhouses

• apartments.

-

• smart homes

• infrastructures (electricity, outlets, Internet).

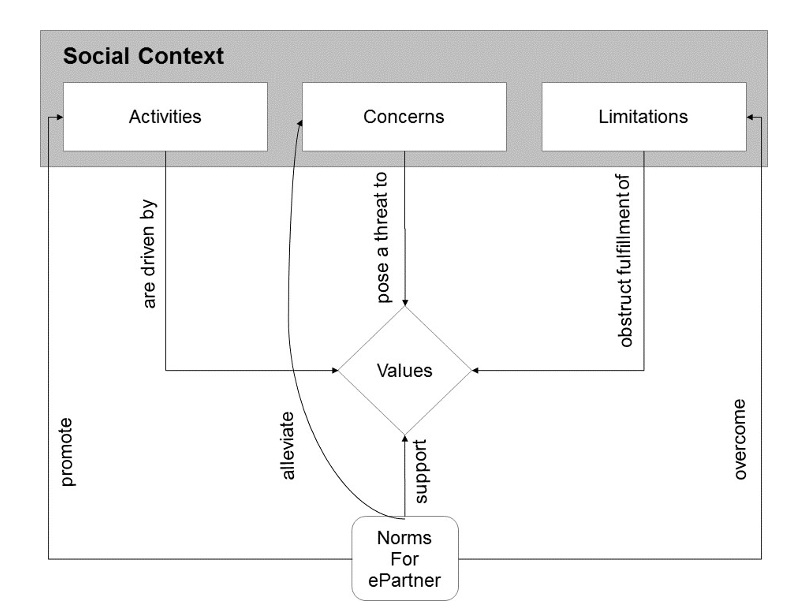

An iterative design process can enable designers to check their assumptions and better understand the consequences of robot use (for gender, see Gender Impact Assessment). Including users in the design process increases the likelihood of solutions that reflect their diverse needs and preferences.

Fig. 1. The Design Thinking Process along with intersectional analysis can be used to develop more inclusive home robot design. Stanford d.School, with permission.

Gendered Innovation 2: Value Alignment between Robot and Household

The activities, behaviors, communication, and data processing of the robot should be aligned with:

• the values of the individual household members (e.g., their domestic space, level of privacy, autonomy, equality, tidiness, and self-efficacy)

• the household’s norms (e.g., responsibilities and power relations).

Method: Co-Creation and Participatory Design

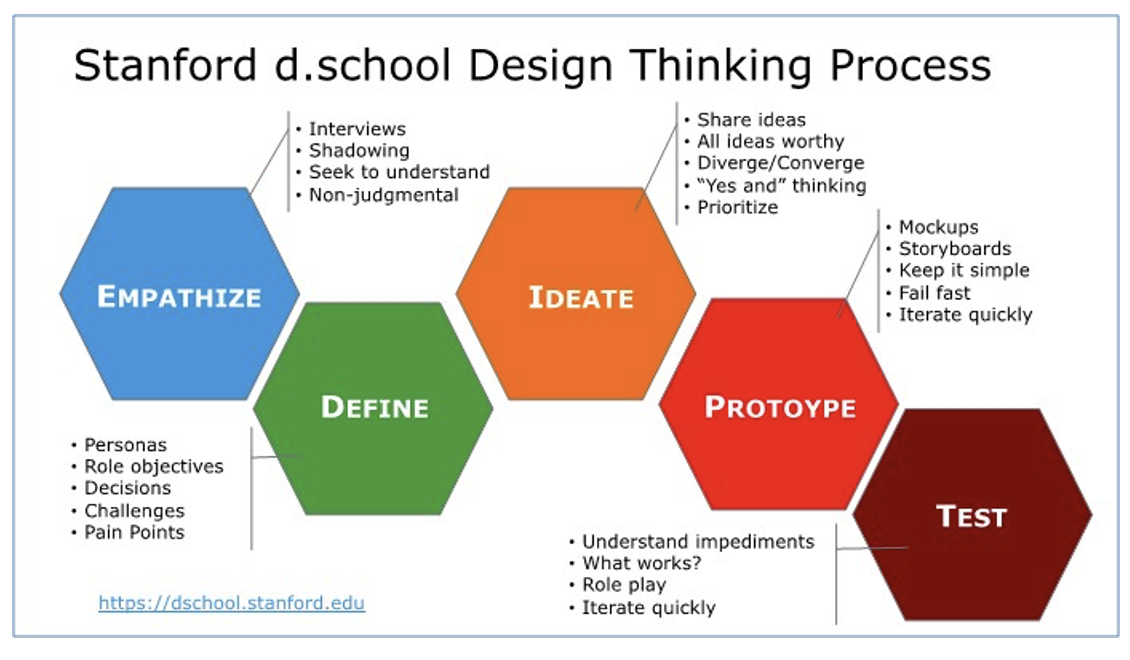

A key step is building a value-norm model into the knowledge base of the robot to allow it to act as a partner in household tasks (see fig. 2).

This value-norm model needs to be instantiated for the specific household tasks the robot performs (e.g., keeping distance and processing data about perceived dirt). The robot should be able to explain each norm, and its own behavior in relation to that norm, to diverse household members (e.g., the origin of and reason for the norm) (Miller, 2019). Note that the norms should support inclusiveness (e.g., specific support needs for household members with disabilities, which may require greater robot autonomy).

Fig. 2: Value-norm model (Kayal et al., 2019).
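As a rough illustration of how a value-norm model might be stored in a robot's knowledge base and used to explain behavior, the following minimal Python sketch links each task to a rule, the value it protects, and the origin of the agreement. The class names, fields, and example norms are assumptions made for illustration; they are not taken from Kayal et al. (2019) or from any deployed robot.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Norm:
    """One household agreement about a robot task (all fields are illustrative)."""
    task: str    # household task the norm applies to, e.g. "vacuuming"
    rule: str    # what the robot does to respect the norm
    value: str   # household value the rule protects, e.g. "privacy"
    origin: str  # where the norm came from, so the robot can explain it


@dataclass
class ValueNormModel:
    """Hypothetical knowledge-base structure mapping tasks to household norms."""
    norms: list[Norm] = field(default_factory=list)

    def add(self, norm: Norm) -> None:
        self.norms.append(norm)

    def for_task(self, task: str) -> list[Norm]:
        """Return every norm that governs the given task."""
        return [n for n in self.norms if n.task == task]

    def explain(self, task: str) -> list[str]:
        """Return one plain-language explanation per norm governing the task."""
        return [
            f"While {n.task}, I {n.rule} to protect {n.value} ({n.origin})."
            for n in self.for_task(task)
        ]


# Example norms, invented for this sketch.
model = ValueNormModel()
model.add(Norm("vacuuming", "keep floor images on the device", "privacy",
               "agreed by all household members"))
model.add(Norm("vacuuming", "keep my distance from people", "autonomy",
               "requested by one household member"))

for sentence in model.explain("vacuuming"):
    print(sentence)
```

Storing the origin alongside each rule is one possible way to let the robot state both what it is doing and why the household asked for it; a real system would also need a process for household members to revise norms as their values shift.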

For more on value-sensitive design, refer to Friedman et al. (2017); for value elicitation methods and value story workshop, refer to Harbers et al. (2015); and for grounded theory, refer to Kayal et al. (2019) and Cremers et al. (2014).

Users need to understand how choices about certain values may affect the robot’s performance. For example, if the household chooses to keep data local to preserve privacy, the robot might learn more slowly (fig. 3).

Fig. 3: Domestic robots and their users will need to establish norms and make agreements on robot activities that involve value tensions. Photo Credit: Pikbest.

Gendered Innovation 3: Overcoming Domain Gaps between Training and Deployment Environments

Robots need to perform with a variety of people in diverse domestic environments. Disparities between design and deployment may result from:

• A mismatch between training scenarios and deployment environments.

• This mismatch may be present in robotic visual perception (Wang et al., 2019). A robot’s visual perception must be trained to recognize diversity, for example, in handedness (right-handed, left-handed, or ambidextrous) for handover tasks (Kennedy et al., 2017).

• This mismatch may be present in robotic language perception. For example, designers can anticipate reduced speech recognition for specific groups, such as children, and build multimodal repair mechanisms into the dialogue so that the robot can understand and continue the conversation (Ligthart et al., 2020).

• A mismatch between the developer and home users in terms of expectations for and perceptions of the robot (Lee et al., 2014). For example, users and roboticists may differ in their perceptions of robots, especially humanoid and social ones (Thellman et al., 2022; Gendering Social Robots). Biased perceptions can arise on the part of users (Tay et al., 2014; Perugia et al., 2022) or roboticists (Robertson, 2010; Seaborn & Frank, 2022).

These mismatches can be grounded in the background information available to roboticists as they build systems (fig. 4). This can include problem definition, feature selection, and machine learning data sources used in training scenarios versus in deployment environments.

Fig. 4. Domain Gap in a real house (left) vs. a house in a robotic simulator (right). Photo credit: Selma Šabanović; Ruohan Zhang, BEHAVIOR-1K developed by Stanford Vision and Learning Lab, with permission.

Closing the domain gaps between training and deployment requires diversity in training tasks, environments, and evaluation metrics. Developers can account for this diversity in demonstrations, instructions, and interactions. They can further ensure that robots meet user needs by involving potential end-users in the design process (Olivier et al., 2022; Barnes et al., 2017; Lee et al., 2017).
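One way to make diversity in evaluation metrics concrete is to report task success separately for each environment or user group rather than as a single average. The sketch below is a minimal Python example of such a disaggregated evaluation. The function names, the grouping keys, and the trial log are all hypothetical and invented for illustration; they are not data from any study cited here.

```python
from collections import defaultdict


def success_by_group(trials, key):
    """Return the task success rate for each value of `key` across trials."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for trial in trials:
        group = trial[key]
        totals[group] += 1
        successes[group] += int(trial["success"])
    return {group: successes[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Difference between the best-served and worst-served groups."""
    return max(rates.values()) - min(rates.values())


# Made-up trial log for a handover task; illustration only.
trials = [
    {"home": "apartment", "handedness": "right", "success": True},
    {"home": "apartment", "handedness": "left", "success": False},
    {"home": "townhouse", "handedness": "right", "success": True},
    {"home": "townhouse", "handedness": "left", "success": True},
]

by_hand = success_by_group(trials, "handedness")
by_home = success_by_group(trials, "home")
print(by_hand, largest_gap(by_hand))
print(by_home, largest_gap(by_home))
```

A large gap between groups on a metric like this can point to a domain gap that an overall average would hide, and can guide which tasks or settings to add to training.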

New machine learning methods, such as multi-task learning and adversarial training, may also be used to alleviate model bias (Benton et al., 2017; Zhang et al., 2018). These methods have the potential to improve performance for diverse users in different environments but have not yet been widely applied to robotics. Further, it is important to critically consider the sources of data, methods of feature extraction, and resulting machine learning models to identify and address potential sources of bias. Limitations should be clearly specified in robot designs and applications.
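To give a sense of how adversarial training can reduce bias, the following Python sketch shows the predictor update direction as we understand it from Zhang et al. (2018): the predictor's gradient has its component along the adversary's gradient removed, and a multiple of the adversary's gradient is then subtracted so that the predictor moves against the adversary. The function names and the toy vectors are assumptions for illustration, and this is not a complete training loop.

```python
def dot(u, v):
    """Dot product of two equal-length sequences of floats."""
    return sum(a * b for a, b in zip(u, v))


def debiased_gradient(grad_pred, grad_adv, alpha):
    """Combine predictor and adversary gradients into one update direction.

    Computes grad_pred - proj_{grad_adv}(grad_pred) - alpha * grad_adv, where
    grad_pred is the gradient of the predictor loss and grad_adv is the
    gradient of the adversary loss, both taken with respect to the
    predictor's weights. alpha is a tunable weight (an assumption here).
    """
    norm_sq = dot(grad_adv, grad_adv)
    if norm_sq == 0.0:
        # The adversary provides no signal, so use the plain predictor gradient.
        return list(grad_pred)
    scale = dot(grad_pred, grad_adv) / norm_sq
    return [gp - scale * ga - alpha * ga for gp, ga in zip(grad_pred, grad_adv)]


# Toy vectors: the adversary's gradient lies along the first weight only.
update = debiased_gradient([1.0, 1.0], [1.0, 0.0], alpha=0.5)
print(update)
```

In this toy case the part of the predictor's gradient that would help the adversary recover the protected attribute is removed, and the remaining update pushes against the adversary along that same direction.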

Gendered Innovation 4: Addressing Domestic and Global Power Dynamics

The introduction of robots into households can deliberately or unintentionally affect social dynamics between various people.

Domestic power dynamics. Robots may intervene in household interactions. A robot microphone that turns to face a person who has not spoken much might inspire more equitable turn-taking in conversation (Tennent et al., 2019). A robot that responds to negative or angry interactions in the home by cringing or shying away may prompt members of the household to reflect on their interactions and potentially ameliorate them (Rifinski et al., 2021). It is therefore important to consider how the robot might directly or indirectly impact the power dynamics in the home. Involving end-users and customizing the robot’s performance within real household contexts may allow for a balance between designer prescription of robot responses and user control during such interactions.

Physical abuse. Should robots be designed to intervene in domestic abuse? Initial research shows that children are more likely to report abusive behavior to robots than to adults (Bethel et al., 2016). How should the robot respond to dangerous situations? Should robots be designed to include reporting capabilities? And to whom will they report? Will users need to opt in/out of these features? Who in the household will decide—especially in cases of disagreement between household members? User-sensitive work is needed to address these open questions.

Overcoming stereotypes. Robots deployed in homes may have the potential to challenge the gendered nature of housework. The Roomba, for example, inspired teens and men to do more vacuuming (Forlizzi, 2007). Robots may also remind household members to do chores, thus removing this emotional labor from women (Dobrosovestnova et al., 2022).

Broader power dynamics. As long as robots are not fully autonomous, they may be partially controlled by remote workers, and the gig economy may move from MTurk into robotics in the near future (e.g., Mandlekar et al., 2018). In some cases, this can have positive outcomes, as exemplified by the DAWN Avatar Robot Café in Tokyo. The DAWN robots are primarily controlled remotely by people with disabilities, thus bringing diverse people into broader social networks. Yet equity is not a given. In the global service economy, care robots used in developed countries are being teleoperated by people from low- and middle-income countries, raising critical questions about labor conditions, global labor extraction, and flows (Guevarra, 2018). Robots may need to challenge existing norms in deployed environments. Norm-critical innovation questions power relations that may perpetuate various forms of discrimination.

Conclusions

Domestic robots have the potential to greatly improve the quality of human life by taking on chores and household maintenance and by providing personal assistance and care. It is important to center the intersectional aspects of robot design by allowing roboticists and users to co-design these robots. For equity and quality of performance, developers must consider ways that robots can engender trust, meet the functional needs of diverse users, and allow for customizability that fits the range of values within a home. It is also important to improve household processes themselves rather than use robots as a band-aid for a system that needs to change. Future domestic robots may, for instance, be sensitive to the social roles of household members and their interactions, including negative ones, and respond in an ethical, value-centered fashion.

Next Steps

1. This case study has focused on robots in domestic spaces. It will also be important to consider interactions with systems outside the household, e.g., delivery, maintenance and repair of the robots, recycling, social services, and possible remote control of the robot.

2. Domestic robots may have broader societal impacts, such as displacing human house cleaners. Local and national governments should consider the broader impacts of automation and develop programs to reskill workers to meet the needs of the future.

Works Cited

Barnes, J., FakhrHosseini, M., Jeon, M., Park, C.-H., & Howard, A. (2017). The influence of robot design on acceptance of social robots. 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), 51–55. https://doi.org/10.1109/URAI.2017.7992883

Benton, A., Mitchell, M., & Hovy, D. (2017). Multi-task learning for mental health using social media text (arXiv:1712.03538). arXiv. https://doi.org/10.48550/arXiv.1712.03538

Bethel, C. L., Henkel, Z., Stives, K., May, D. C., Eakin, D. K., Pilkinton, M., Jones, A., & Stubbs-Richardson, M. (2016). Using robots to interview children about bullying: Lessons learned from an exploratory study. 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 712–717. https://doi.org/10.1109/ROMAN.2016.7745197

Cremers, A. H. M., Jansen, Y. J. F. M., Neerincx, M. A., Schouten, D., & Kayal, A. (2014). Inclusive design and anthropological methods to create technological support for societal inclusion. In C. Stephanidis & M. Antona (Eds.), Universal Access in Human-Computer Interaction. Design and Development Methods for Universal Access (pp. 31–42). Springer International Publishing. https://doi.org/10.1007/978-3-319-07437-5_4

Dobrosovestnova, A., Hannibal, G., & Reinboth, T. (2022). Service robots for affective labor: A sociology of labor perspective. AI & SOCIETY, 37(2), 487–499. https://doi.org/10.1007/s00146-021-01208-x

Forlizzi, J. (2007). How robotic products become social products: An ethnographic study of cleaning in the home. 2007 2nd ACM/IEEE International Conference on Human-Robot Interaction (HRI), 129–136. https://doi.org/10.1145/1228716.1228734

Friedman, B., Hendry, D. G., & Borning, A. (2017). A survey of value sensitive design methods. Foundations and Trends® in Human–Computer Interaction, 11(2), 63–125. https://doi.org/10.1561/1100000015

Guevarra, A. R. (2018). Mediations of care: Brokering labour in the age of robotics. Pacific Affairs, 91(4), 739–758. https://doi.org/10.5509/2018914739

Harbers, M., Detweiler, C., & Neerincx, M. A. (2015). Embedding stakeholder values in the requirements engineering process. In S. A. Fricker & K. Schneider (Eds.), Requirements Engineering: Foundation for Software Quality (pp. 318–332). Springer International Publishing. https://doi.org/10.1007/978-3-319-16101-3_23

Kayal, A., Brinkman, W.-P., Neerincx, M. A., & van Riemsdijk, M. B. (2019). A user-centred social commitment model for location sharing applications in the family life domain. International Journal of Agent-Oriented Software Engineering, 7(1), 1–36.

Kennedy, J., Lemaignan, S., Montassier, C., Lavalade, P., Irfan, B., Papadopoulos, F., Senft, E., & Belpaeme, T. (2017). Child speech recognition in human-robot interaction: Evaluations and recommendations. Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 82–90. https://doi.org/10.1145/2909824.3020229

Lee, H. R., Šabanović, S., & Stolterman, E. (2014). Stay on the boundary: Artifact analysis exploring researcher and user framing of robot design. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 1471–1474. https://doi.org/10.1145/2556288.2557395

Lee, H. R., Šabanović, S., Chang, W.-L., Nagata, S., Piatt, J., Bennett, C., & Hakken, D. (2017). Steps toward participatory design of social robots: Mutual learning with older adults with depression. Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 244–253. https://doi.org/10.1145/2909824.3020237

Ligthart, M. E. U., Neerincx, M. A., & Hindriks, K. V. (2020). Design patterns for an interactive storytelling robot to support children’s engagement and agency. Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, 409–418. https://doi.org/10.1145/3319502.3374826

Mandlekar, A., Zhu, Y., Garg, A., Booher, J., Spero, M., Tung, A., Gao, J., Emmons, J., Gupta, A., Orbay, E., Savarese, S., & Fei-Fei, L. (2018). ROBOTURK: A crowdsourcing platform for robotic skill learning through imitation. Proceedings of the 2nd Conference on Robot Learning, 879–893. https://proceedings.mlr.press/v87/mandlekar18a.html

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38. https://doi.org/10.1016/j.artint.2018.07.007

Mioch, T., Peeters, M. M. M., & Neerincx, M. A. (2018). Improving adaptive human-robot cooperation through work agreements. 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 1105–1110. https://doi.org/10.1109/ROMAN.2018.8525776

Olivier, M., Rey, S., Voilmy, D., Ganascia, J.-G., & Lan Hing Ting, K. (2022). Combining cultural probes and interviews with caregivers to co-design a social mobile robotic solution. IRBM. https://doi.org/10.1016/j.irbm.2022.06.004

Perugia, G., Guidi, S., Bicchi, M., & Parlangeli, O. (2022). The shape of our bias: Perceived age and gender in the humanoid robots of the ABOT database. Proceedings of the 2022 ACM/IEEE International Conference on Human-Robot Interaction, 110–119. https://dl.acm.org/doi/10.5555/3523760.3523779

Rifinski, D., Erel, H., Feiner, A., Hoffman, G., & Zuckerman, O. (2021). Human-human-robot interaction: Robotic object’s responsive gestures improve interpersonal evaluation in human interaction. Human–Computer Interaction, 36(4), 333–359. https://doi.org/10.1080/07370024.2020.1719839

Robertson, J. (2010). Gendering humanoid robots: Robo-sexism in Japan. Body & Society, 16(2), 1–36. https://doi.org/10.1177/1357034X10364767

Seaborn, K., & Frank, A. (2022). What pronouns for Pepper? A critical review of gender/ing in research. Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, 1–15. https://doi.org/10.1145/3491102.3501996

Singh, M. P. (1999). An ontology for commitments in multiagent systems. Artificial Intelligence and Law, 7(1), 97–113. https://doi.org/10.1023/A:1008319631231

Tay, B., Jung, Y., & Park, T. (2014). When stereotypes meet robots: The double-edge sword of robot gender and personality in human–robot interaction. Computers in Human Behavior, 38, 75–84. https://doi.org/10.1016/j.chb.2014.05.014

Tennent, H., Shen, S., & Jung, M. (2019). Micbot: A peripheral robotic object to shape conversational dynamics and team performance. 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 133–142. https://doi.org/10.1109/HRI.2019.8673013

Thellman, S., de Graaf, M., & Ziemke, T. (2022). Mental state attribution to robots: A systematic review of conceptions, methods, and findings. ACM Transactions on Human-Robot Interaction (THRI), 11(4), 1–51. https://doi.org/10.1145/3526112

Wang, T., Zhao, J., Yatskar, M., Chang, K.-W., & Ordonez, V. (2019). Balanced datasets are not enough: Estimating and mitigating gender bias in deep image representations. Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 5310–5319. https://openaccess.thecvf.com/content_ICCV_2019/html/Wang_Balanced_Datasets_Are_Not_Enough_Estimating_and_Mitigating_Gender_Bias_ICCV_2019_paper.html

Zhang, B. H., Lemoine, B., & Mitchell, M. (2018). Mitigating unwanted biases with adversarial learning. Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, 335–340. https://doi.org/10.1145/3278721.3278779
