Gendering Social Robots: Analyzing Gender and Intersectionality

The Challenge

Humans tend to treat robots like humans, projecting human characteristics, such as personality and intentionality, onto machines. Humans also project gender onto robots, including expectations about how "male" and "female" entities should act.

Method: Analyzing Gender and Intersectionality in Social Robots

Robots are designed in a world alive with gender norms, gender identities, and gender relations. Humans—whether as designers or users—tend to gender machines (because, in human cultures, gender is a primary social category). Should this gendering be promoted? Does gendering robots enhance acceptance by humans? Does it enhance performance when humans and robots collaborate? Or should the gendering of robots be resisted? Does it reinforce gender stereotypes that amplify social inequalities? The danger is that designing hardware toward current stereotypes can reinforce those stereotypes. The challenge for designers is to understand how gender becomes embodied in robots in order to design robots in ways that promote social equality.

Gendered Innovations:

1. Understanding How Gender is Embodied in Robots

2. Designing Robots to Promote Social Equality

The Challenge

Until recently, robots were largely confined to factories. Most people never see or interact with these robots, which do not look, sound, or behave like humans. But engineers are increasingly designing robots to interact with humans as service robots in hospitals, elder care facilities, classrooms, people’s homes, airports, and hotels. These service robots are the focus of this case study. Robots are also being developed for warfare, policing, bomb defusing, security, and the sex industry, topics we do not treat.

Women, men, and gender-diverse individuals may have different needs or social preferences, and designers should aim for gender-inclusive design (Wang & Young, 2014). Gender-inclusive design is neither “gender-blind” nor “gender-stereotypical,” but design that considers the unique needs of distinct social groups.

Robot designers and specialists in social human-robot interaction (sHRI) argue that our tendency to project human social cues, including gender, onto artificial agents may help users engage more effectively with robots (Simmons et al., 2011; Makatchev et al., 2013; Jung et al., 2016). As soon as users assign gender to a machine, however, stereotypes follow. Designers of robots and artificial intelligence do not simply create products that reflect our world; they also (perhaps unintentionally) reinforce and validate certain gender norms (i.e., social attitudes and behaviors) considered appropriate for women, men, or gender-diverse people.

How do humans “gender” social robots? Do robots provide new opportunities to create more equitable gender norms? How can we best design socially-responsible robots?

Gendered Innovation 1: Understanding How Gender is Embodied in Robots

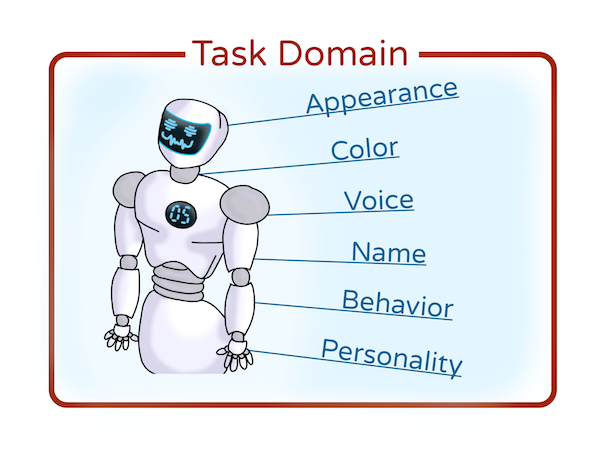

Gender cues may be embodied in social robots in multiple ways (Kittmann et al., 2015). Even a single, minimalist gender cue can trigger a gender interpretation along with normative expectations of behavior. The accompanying figure suggests aspects of robot design that may embed gender cues.

Many task domains in human society are gender specific, i.e., dominated by either women or men. Domestic labor and healthcare, for example, are considered female-specific domains, where a “female” robot may perform best because it matches human expectations. Security and math tutoring, by contrast, are considered male-specific, and users may prefer “male” robots.

Gendered Innovation 2: Can Robots Be Designed to Promote Social Equality?

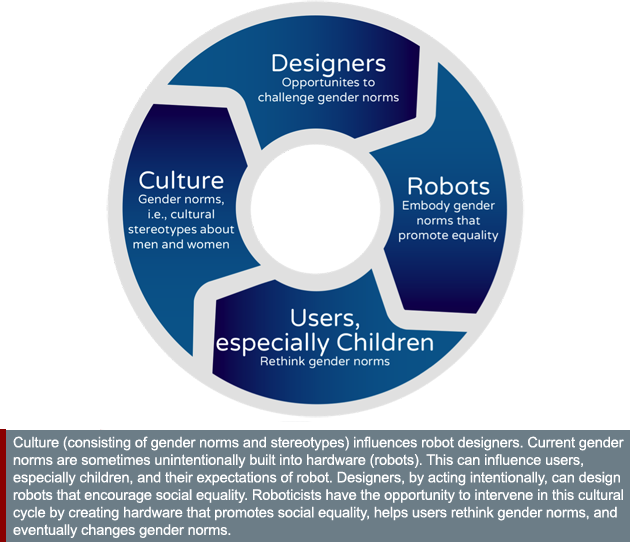

Robot designers have the opportunity to challenge gender stereotypes in ways that can lead users to rethink gender norms (see diagram).

Creating a Virtuous Circle: Robotics as a Catalyst for Social Equality

Method: Formulating Research Questions

How can robots be designed to simultaneously ensure high user uptake and to promote social equality? We see at least six options:

1. Challenge current gender stereotypes

2. Design customizable robots, where users choose features

3. Design “genderless” robots

4. Design gender-fluid robots, prioritizing gender equality

5. Step out of human social relations

6. Design “robot-specific” identities that bypass social stereotypes

Roboticists have numerous options for considering gender in robots.

1. Challenge current gender stereotypes

It is well established that humans interact with robots in ways that are comparable to human/human interaction. Building stereotypes into hardware hardens stereotypes in ways that may perpetuate current social inequalities (Eyssel & Hegel, 2012). Designing gender stereotypes into robots may: 1) amplify inequalities; 2) be offensive to humans; 3) result in market failures.

NASA’s superhero rescue robot Valkyrie challenges gender stereotypes. Although NASA officially claims Valkyrie is gender-neutral, its designer intentionally built a female superhero (1.87 m, 129 kg) to inspire his then 7-year-old daughter. The robot comes complete with breasts and a female name (Valkyrie refers to goddess-like figures in Norse mythology). To get the form right, the team brought in a French physicist turned fashion designer to help with the design (Dattaro, 2015). Although some characteristics appear masculine, such as the visual cues to Iron Man, a female figure in this space is noteworthy.

Gender cues can be subtle. SoftBank refers to its robot, Pepper, as “he,” although the cinched waist and skirt-like legs suggest a female. SoftBank advertises Pepper as the “first humanoid robot capable of recognising the principal human emotions and adapting his behavior to the mood of his interlocutor.” Emotion is often considered a female-specific domain; in this instance, the robot creators have countered cultural stereotypes by gendering Pepper male.

2. Design customizable robots

When designers decide to create human-like robots, one option is to allow users to customize the gender cues. The designers of x.ai, a scheduling bot, offer consumers a choice of names: Amy or Andrew. Although the names are gendered, the voice is the same and is said to “defy gender stereotypes.” As stated by the company, “the goal is to offer people a choice of genders for agent name but to make sure all of phrasings are gender neutral, mostly by sticking to facts such as time, place, and location without chit-chat” (Coren, 2017). The names Amy and Andrew are, of course, ethnically white and English. Consumers aren’t given the choice between “Imani” and “Jamal,” for instance (Sparrow, 2020). Further, gender-fluid people will want choices that go beyond gender binaries.

Savioke’s Relay service robot is interesting in this regard. Out of the box, it is gender neutral, with a pink-blending-to-blue display. The robot, which does not speak, is said by its creators to be “charming, polite, and honest” and is labeled a “Botlr,” a play on “butler.” The top opens to deliver towels, newspapers, and other service items to hotel guests. Savioke designers call it “he,” but this seems an unconscious default, and the robot might as easily be called “it.”

Relay is also customizable. With a black bowtie (male) but yellow and pink stripes, the robot becomes nicely gender ambiguous.

With the same bowtie in turquoise, a pink jacket, and female name, it becomes “Jena.” The color scheme echoes themes of the Singapore Hotel Jen where the robot is employed.

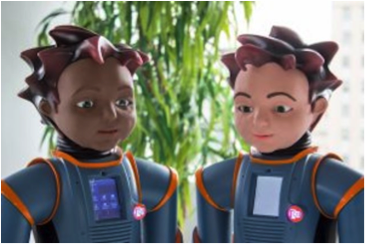

Robots can also be customized around ethnicity and gender. Robokind designs robots for learners with autism spectrum disorder (ASD). For many years Robokind offered only boy-like robots in a variety of skin tones, Carver (left) and Milo (right). Because autism affects four times as many boys as girls, these robots were, perhaps rightly, boy-like. But millions of girls also suffer from ASD. Notably, Robokind recently introduced girl-like robots, Jemi (right) and Veda (left), to offer girls useful educational tools.

3. Design genderless robots

Roboticists may opt to design genderless robots. Matilda is a genderless robot (despite the female name) used in Australian retirement homes and in some special education classrooms. This assistive robot has the appearance of a baby and a childish voice. Baby-faced robots (i.e., robots with large eyes and small chins) are perceived to be naïve and non-threatening (Powers & Kiesler, 2006). A recent study found that users with dementia engaged with and enjoyed the baby-like Matilda. Eighty-three percent of participants said they liked contact with Matilda and 72 percent said they felt relaxed in its presence (Khosla et al., 2013; Tam & Khosla, 2016).

SoftBank’s NAO is another example of a child-like, genderless robot (Obaid et al., 2016). Although the designers refer to NAO as “he,” human annotators judged NAO genderless (Otterbacher & Talias, 2017). Robot appearance is one feature; voice is another. NAO’s default voice (Operating System version 1.x) is a synthetic male child’s voice, known as Kenny (Sandygulova & O’Hare, 2015). The voice can also be manipulated to higher frequencies to suggest a female child.

Honda’s ASIMO (Advanced Step in Innovative MObility) has no obvious gender cues and speaks in a childish voice. The problem is that people may assume robots have a gender identity, even when roboticists have designed them to be neutral. ASIMO can easily be seen as male because of its form, shape, and behavior.

4. Design gender-fluid robots, prioritizing gender equality

To our knowledge, roboticists have not experimented with gender fluidity.

5. Step out of human social relations

To avoid human stereotypes, some robot designers have stepped out of human relations altogether. The Japanese creators of the RIBA-II, a large care-giving robot designed to lift sick or elderly people, chose to depict their robot as a large teddy bear rather than a human—thus removing the machine from human gender relations.

6. Design “robot-specific” identities that bypass social stereotypes

Fraunhofer and Phoenix Design’s Care-O-Bot 4 is of interest because it was designed to be neither human nor machine, but something called “technomorphic” and “iconic” (Kittmann et al., 2015). The team, which brought together product designers, robotics and software engineers, and UX designers in a human-centered design process, avoided human features, including gender, so as not to raise unrealistic expectations of the robot’s capacities among users. The iconic design, they state, is open to interpretation: “A person who prefers a male robot, might recognize it in the design, a person who prefers a female robot might perceive it as well in the same design” (Parlitz et al., 2008).

The designers sought to create an “attractive,” “approachable” robot (Kittmann et al., 2015). The robot conveys the emotions required in care settings through gestures (primarily with eyes displayed on a screen, a cocked head, etc.). The “head” is a touch screen equipped with cameras and sensors to “see” users’ gestures, along with facial-recognition algorithms to estimate a user’s gender, age, and emotion.

Although the designers call the Care-O-Bot a “gentleman,” it functions largely outside gender norms. “Care-O-Bot” is a gender-neutral name. Users can personalize the name and choose male or female voices in forty different languages.

Conclusions

We propose a Hippocratic oath for roboticists: to help or, at least, do no harm. The danger is that unconsciously designing robots toward current gender stereotypes may reinforce those stereotypes in ways roboticists did not intend. Roboticists have an opportunity to intervene in the human world. It is well established that technology has an impact on human culture. Video games, for example, influence players’ real-world behaviors. Controlled experiments show that violent game play (in first-person shooter games, such as Wolfenstein 3D, or third-person fighter games, such as Mortal Kombat) increases the incidence of self-reported aggressive thoughts in the short term (Anderson et al., 2007). Research has also shown that prosocial games in which the goal is “to benefit another game character” can make gamers more likely to take prosocial action, defined as voluntary actions intended to help others (Greitemeyer & Osswald, 2010). Robots can similarly have an impact on human gender norms. Robots can either reproduce gender stereotypes or challenge them in ways that lead users to rethink gender norms. As roboticists better understand how gender is embodied in robots, they can design robots in ways that are safe, effective, and promote social equality.

Next Steps

We propose that researchers design controlled experiments to determine how perceived robot gender shapes human gender norms. Does robot gender promote or hinder gender equality? Researchers have experimented with immersive virtual reality and race. With virtual reality, people can experience having a body of a different race. Such embodiment of a European-American in an African-American body can reduce implicit racial bias (Banakou et al., 2016; Hasler et al., 2017; but see Lai et al., 2016). We propose analogous experiments to test how perceived robot gender impacts human attitudes toward gender equality.

Works Cited

Anderson, C., Buckley, K., & Gentile, D. (2007). Violent Video Game Effects on Children and Adolescents: Theory, Research, and Public Policy. New York: Oxford University Press.

Andrist, S., Ziadee, M., Boukaram, H., Mutlu, B., & Sakr, M. (2015, March). Effects of culture on the credibility of robot speech: A comparison between English and Arabic. In Proceedings of the Tenth Annual ACM/IEEE International Conference on Human-Robot Interaction, 157-164. ACM.

Atkinson, M. (1984). Our Masters' Voices: The Language and Body-Language of Politics. London: Methuen.

Banakou, D., Hanumanthu, P. D., & Slater, M. (2016). Virtual embodiment of white people in a black virtual body leads to a sustained reduction in their implicit racial bias. Frontiers in human neuroscience, 10, 601.

Carpenter, J., Davis, J. M., Erwin-Stewart, N., Lee, T. R., Bransford, J. D., & Vye, N. (2009). Gender representation and humanoid robots designed for domestic use. International Journal of Social Robotics, 1(3), 261–265. https://doi.org/10.1007/s12369-009-0016-4

Coren, M. J. (2017, July 26). It took (only) six years for bots to start ditching outdated gender stereotypes. Quartz. Retrieved from https://qz.com/1033587/it-took-only-six-years-for-bots-to-start-ditching-outdated-gender-stereotypes/

Dattaro, L. (2015, Feb 4). Bot looks like a lady: Should robots have gender? Slate. http://www.slate.com/articles/technology/future_tense/2015/02/robot_gender_is_it_bad_for_human_women.

Eyssel, F., & Hegel, F. (2012). (S)he’s got the look: Gender stereotyping of robots. Journal of Applied Social Psychology, 42(9), 2213–2230. https://doi.org/10.1111/j.1559-1816.2012.00937.

Eyssel, F., & Kuchenbrandt, D. (2012). Social categorization of social robots: Anthropomorphism as a function of robot group membership. British Journal of Social Psychology, 51(4), 724-731.

Goetz, J., Kiesler, S., & Powers, A. (2003). Matching robot appearance and behavior to tasks to improve human-robot cooperation. In Proceedings of Robot and Human Interactive Communication (pp. 55–60). IEEE. https://doi.org/10.1109/ROMAN.2003.1251796

Greitemeyer, T., & Osswald, S. (2010). Effects of prosocial video games on prosocial behavior. Journal of Personality and Social Psychology, 98(2), 211-221.

Hasler, B. S., Spanlang, B., & Slater, M. (2017). Virtual race transformation reverses racial in-group bias. PloS one, 12(4), e0174965.

Jung, E. H., Waddell, T. F., & Sundar, S. S. (2016). Feminizing robots: User responses to gender cues on robot body and screen. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems (pp. 3107–3113). San Jose, CA.

Khosla, R., Chu, M.-T., & Nguyen, K. (2013). Affective robot enabled capacity and quality improvement of nursing home aged care services in Australia. Presented at the Computer Software and Applications Conference Workshops, Japan. https://doi.org/10.1109/COMPSACW.2013.89

Kittmann, R., Fröhlich, T., Schäfer, J., Reiser, U., Weißhardt, F., & Haug, A. (2015). Let me introduce myself: I am Care-O-bot 4, a gentleman robot. Mensch und Computer 2015 – Proceedings.

Knight, W. (2017, August 16). AI programs are learning to exclude some African-American voices. MIT Technology Review.

Lai, C. K., Skinner, A. L., Cooley, E., Murrar, S., Brauer, M., Devos, T., ... & Simon, S. (2016). Reducing implicit racial preferences: II. Intervention effectiveness across time. Journal of Experimental Psychology: General, 145(8), 1001.

Li, D., Rau, P. P., & Li, Y. (2010). A cross-cultural study: Effect of robot appearance and task. International Journal of Social Robotics, 2(2), 175-186.

Makatchev, M., Simmons, R., Sakr, M., & Ziadee, M. (2013). Expressing ethnicity through behaviors of a robot character. In Proceedings of the 8th ACM/IEEE international conference on Human-robot interaction (pp. 357-364). IEEE Press.

Nass, C., & Moon, Y. (2000). Machines and mindlessness: Social responses to computers. Journal of Social Issues, 56(1), 81–103. https://doi.org/10.1111/0022-4537.00153

Nass, C., Moon, Y., & Green, N. (1997). Are machines gender neutral? Gender‐stereotypic responses to computers with voices. Journal of applied social psychology, 27(10), 864-876.

Obaid, M., Sandoval, E. B., Złotowski, J., Moltchanova, E., Basedow, C. A., & Bartneck, C. (2016, August). Stop! That is close enough. How body postures influence human-robot proximity. In Robot and Human Interactive Communication (RO-MAN), 2016 25th IEEE International Symposium on (pp. 354-361). IEEE.

Otterbacher, J., & Talias, M. (2017, March). S/he's too warm/agentic!: The influence of gender on uncanny reactions to robots. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 214-223.

Parlitz, C., Hägele, M., Klein, P., Seifert, J., & Dautenhahn, K. (2008). Care-o-bot 3-rationale for human-robot interaction design. In Proceedings of 39th International Symposium on Robotics (ISR), Seoul, Korea, 275-280.

Powers, A., & Kiesler, S. (2006, March). The advisor robot: Tracing people's mental model from a robot's physical attributes. In Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction (pp. 218-225). ACM.

Rea, D. J., Wang, Y., & Young, J. E. (2015, October). Check your stereotypes at the door: An analysis of gender typecasts in social human-robot interaction. In International Conference on Social Robotics, 554-563.

Reich-Stiebert, N., & Eyssel, F. (2017, March). (Ir)relevance of gender?: On the influence of gender stereotypes on learning with a robot. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction, 166-176.

Sandygulova, A., & O’Hare, G. M. (2015). Children’s perception of synthesized voice: Robot’s gender, age and accent. In International Conference on Social Robotics, 594-602.

Sparrow, R. (2020). Robotics has a race problem. Science, Technology, & Human Values, 45(3), 538-560.

Tam, L., & Khosla, R. (2016). Using social robots in health settings: Implications of personalization for human-machine communication. Communication+ 1, 5.

Wang, Y., & Young, J. E. (2014, May). Beyond pink and blue: Gendered attitudes towards robots in society. In Proceedings of Gender and IT Appropriation. Science and Practice on Dialogue-Forum for Interdisciplinary Exchange. European Society for Socially Embedded Technologies.

Wang, Z., Huang, J., & Fiammetta, C. (2021). Analysis of Gender Stereotypes for the Design of Service Robots: Case Study on the Chinese Catering Market. In Designing Interactive Systems Conference 2021, 1336-1344.
