Facial Recognition: Analyzing Gender and Intersectionality in Machine Learning

Facial recognition systems (FRSs) can identify people in crowds, analyze emotion, and detect gender, age, race, sexual orientation, facial characteristics, etc. These systems are often employed in recruitment, authorizing payments, security, surveillance and unlocking phones. Despite efforts by academic and industrial researchers to improve reliability and robustness, recent studies demonstrate that these systems can discriminate based on characteristics such as race and gender, and their intersections (Buolamwini & Gebru, 2018).

Method: Analyzing Gender and Intersectionality in Machine Learning

Bias in machine learning (ML) is multifaceted and can result from data collection, or from data preparation and model selection. For example, a dataset populated mostly with men and lighter-skinned individuals will misidentify darker-skinned women more often. This is an example of intersectional bias, in which different types of discrimination amplify negative effects on an individual or group.

Gendered Innovations:

- Understanding Discrimination in Facial Recognition. Each step in an FRS, from face detection to facial attribute classification, face verification, and facial identification, should be checked for bias. To work well, models must be trained on datasets that represent the target population. Models trained on mature adults, for example, will not perform well on young people (Howard et al., 2017).

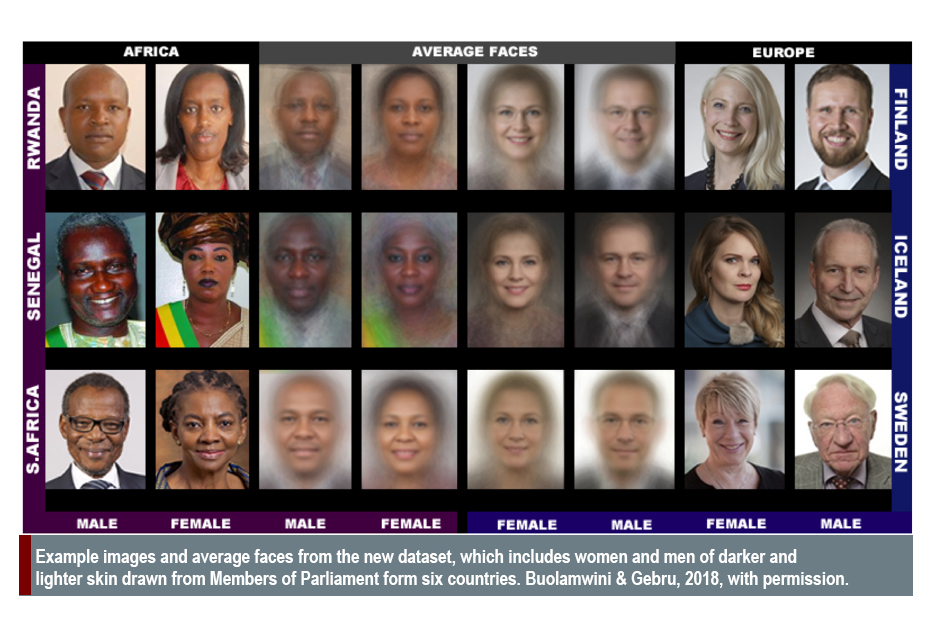

- Creating Intersectional Training Datasets. To work properly in tasks such as validating the identity of people crossing international borders, training datasets may need to include intersectional characteristics, such as gender and race. The Gender Shades project, based at MIT, developed and validated such a dataset for four categories: darker-skinned women, darker-skinned men, lighter-skinned women and lighter-skinned men (Buolamwini & Gebru, 2018).

- Establishing Parameters for a Diverse Set of Faces. Wearing facial cosmetics can reduce the accuracy of facial recognition systems by up to 76% (Dantcheva et al., 2012; Chen et al., 2015). Transgender people, especially during transition, may be identified incorrectly (Keyes, 2018). Publicly available face databases need to establish parameters that improve accuracy across diverse categories (Eckert et al., 2013) without endangering those communities (Keyes, 2018).

The Challenge

Facial recognition systems (FRSs) can identify people in crowds, analyze emotion, and detect gender, age, race, sexual orientation, facial characteristics, etc. These systems are employed in many areas, e.g., hiring, authorizing payments, security, surveillance, and unlocking phones. Despite efforts by both academic and industrial researchers to improve reliability and robustness, recent studies demonstrate that these systems can discriminate based on characteristics such as race and gender and their intersections (Buolamwini & Gebru, 2018). In response, national governments, companies, and academic researchers are debating the ethics and legality of facial recognition. One response is to improve the accuracy and fairness of the technology itself; another is to evaluate how it is used and to regulate its deployment through carefully implemented policies.

Method: Analyzing Gender and Intersectionality in Machine Learning

Bias in machine learning (ML) is multifaceted and can result from data collection, or from data preparation and model selection. In the first case, the data may contain human bias. For example, a dataset populated mostly with men and lighter-skinned individuals will misidentify darker-skinned women at higher rates. This is an example of intersectional bias, where different types of discrimination amplify negative effects for an individual or group. Intersectionality refers to intersecting categories such as ethnicity, age, socioeconomic status, sexual orientation and geographic location that shape individual or group identities, experiences and opportunities. Bias may also be introduced during data preparation and model selection, which involve deciding which attributes the algorithm should consider or ignore and how it should handle variation such as facial cosmetics or the changing faces of transgender individuals during transition.
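Because intersectional bias can hide inside groups that look adequately represented on each attribute alone, a useful first check is to count training examples in every combination of attributes. The minimal sketch below assumes records with hypothetical `gender` and `skin_tone` fields and uses only the two-by-two categories discussed later in this case study; a real audit would use the categories relevant to the target population, including non-binary genders.

```python
from collections import Counter
from itertools import product

# Hypothetical attribute values; a real audit would choose categories
# that reflect the population the system will be used on.
GENDERS = ("woman", "man")
SKIN_TONES = ("darker", "lighter")


def representation_report(records):
    """Count examples in every intersectional cell (gender x skin tone).

    Cells with no examples are reported explicitly as (0, 0.0) so that
    missing groups are not silently dropped from the report.
    """
    counts = Counter((r["gender"], r["skin_tone"]) for r in records)
    total = sum(counts.values())
    return {
        cell: (counts.get(cell, 0), counts.get(cell, 0) / total if total else 0.0)
        for cell in product(GENDERS, SKIN_TONES)
    }


# Toy dataset skewed toward men and lighter-skinned individuals.
data = (
    [{"gender": "man", "skin_tone": "lighter"}] * 60
    + [{"gender": "woman", "skin_tone": "lighter"}] * 25
    + [{"gender": "man", "skin_tone": "darker"}] * 12
    + [{"gender": "woman", "skin_tone": "darker"}] * 3
)
for cell, (n, share) in representation_report(data).items():
    print(cell, n, f"{share:.0%}")
```

In this toy data, women and darker-skinned people each make up a noticeable share, yet darker-skinned women are only 3% of the examples, which is exactly the kind of gap a single-attribute count would miss.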

Gendered Innovation 1: Understanding Discrimination in Facial Recognition

To understand discrimination in FRSs, it is important to understand the difference between face detection, facial attribute classification, facial recognition, face verification and facial identification.

Face detection can occur without facial recognition; however, facial recognition does not work without face detection. Face detection differentiates a human face from other objects in an image or video. Face detection systems have repeatedly been shown to fail for darker-skinned individuals (Buolamwini, 2017). For example, early versions of the statistical image-labelling algorithm used by Google Photos classified darker-skinned people as gorillas (Garcia, 2016). If this first step fails, FRSs fail.

Facial attribute classification labels facial attributes such as gender, age, ethnicity, the presence of a beard or a hat, or even emotions. For emotions, a review of online recognition APIs showed that over 50% of the systems used facial expressions to define emotions (Doerrfeld, 2015). To work, these models must be trained on datasets that are representative of the target population. Models trained on mature adults, for example, will not perform well on young people (Howard et al., 2017).

Facial recognition identifies a unique individual by comparing an image of that person to their known facial contours. One type of facial recognition is face verification (a one-to-one comparison), often used to unlock a phone or to validate the identity of someone crossing an international border (see the EU-funded H2020 project, iBorderCtrl). These solutions are designed to decrease processing time. If, however, the FRSs are not trained sufficiently on, for example, darker-skinned women (Buolamwini & Gebru, 2018) or transgender faces (Keyes, 2018), the technologies will fail for them (see below). The other type of facial recognition is facial identification (a one-to-many search), which matches the face of a person of interest against a database of faces. This technology is often used in missing-person and criminal cases.
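The difference between verification and identification can be made concrete with face embeddings (numeric vectors that a face-encoding model produces from a detected face). The sketch below assumes such embeddings already exist, uses cosine similarity and a hypothetical threshold of 0.8, and is an illustration rather than how any particular commercial FRS works. If face detection fails, there is no embedding to compare, so neither function can run.

```python
import math

# Hypothetical operating point; deployed systems tune this threshold.
THRESHOLD = 0.8


def cosine(a, b):
    """Cosine similarity between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def verify(probe, enrolled, threshold=THRESHOLD):
    """1:1 verification (e.g. phone unlock): is the probe the enrolled person?"""
    return cosine(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=THRESHOLD):
    """1:N identification: best-matching identity in the gallery,
    or None if no enrolled template reaches the threshold."""
    best_id, best_score = None, threshold
    for identity, template in gallery.items():
        score = cosine(probe, template)
        if score >= best_score:
            best_id, best_score = identity, score
    return best_id


# Toy 3-D embeddings standing in for real encoder output.
gallery = {"person_a": [1.0, 0.0, 0.0], "person_b": [0.0, 1.0, 0.0]}
print(verify([0.9, 0.1, 0.0], gallery["person_a"]))  # True
print(identify([0.9, 0.1, 0.0], gallery))            # person_a
print(identify([0.0, 0.0, 1.0], gallery))            # None
```

A single global threshold is itself a design choice: if embeddings for an under-represented group are less well separated, the same threshold yields more false rejections or false matches for that group.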

Gendered Innovation 2: Creating Intersectional Training Datasets

The accuracy of FRSs depends heavily on the images or videos collected for training. For FRSs to perform well, training data must be sufficiently broad and diverse to enable the predictive model to identify faces accurately in a variety of contexts.

Zhao et al. (2017) found that when photographs depict a man in a kitchen, automated image captioning algorithms systematically misidentify the individual as a woman, in part because training sets portray women in cooking contexts 33% more frequently than men. The trained model amplified this disparity from 33% to 68% during testing. It is crucial to get the training data right.
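Zhao et al. (2017) quantify this kind of amplification with a bias score computed from co-occurrence counts of labels. The sketch below is a simplified illustration of the idea, not the paper's exact metric: it assumes only two gender labels and uses hypothetical counts (not the paper's data), comparing the share of women in one context in the training labels with the share in the model's predictions.

```python
def woman_share(counts):
    """Fraction of images in one context (e.g. cooking) labelled or predicted as women."""
    return counts["woman"] / (counts["woman"] + counts["man"])


def amplification(train_counts, predicted_counts):
    """Change in a context's gender skew between training labels and model output.

    Positive values mean the model exaggerates the skew already present
    in the training data; zero means it reproduces it exactly.
    """
    return woman_share(predicted_counts) - woman_share(train_counts)


# Hypothetical counts for the "cooking" context.
train = {"woman": 66, "man": 34}      # ground-truth labels in the training set
predicted = {"woman": 84, "man": 16}  # model predictions on held-out images

print(f"{amplification(train, predicted):+.2f}")  # +0.18
```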

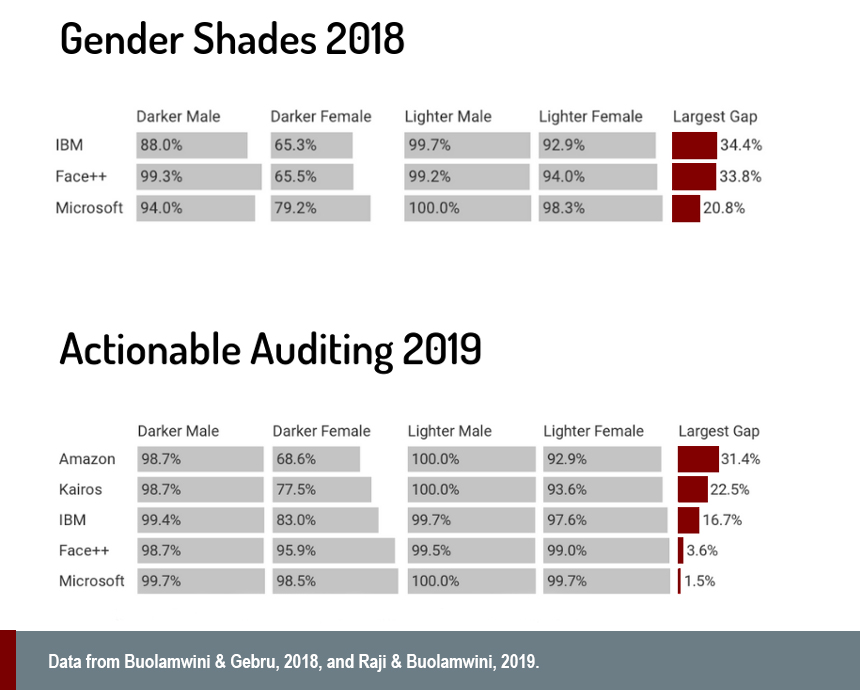

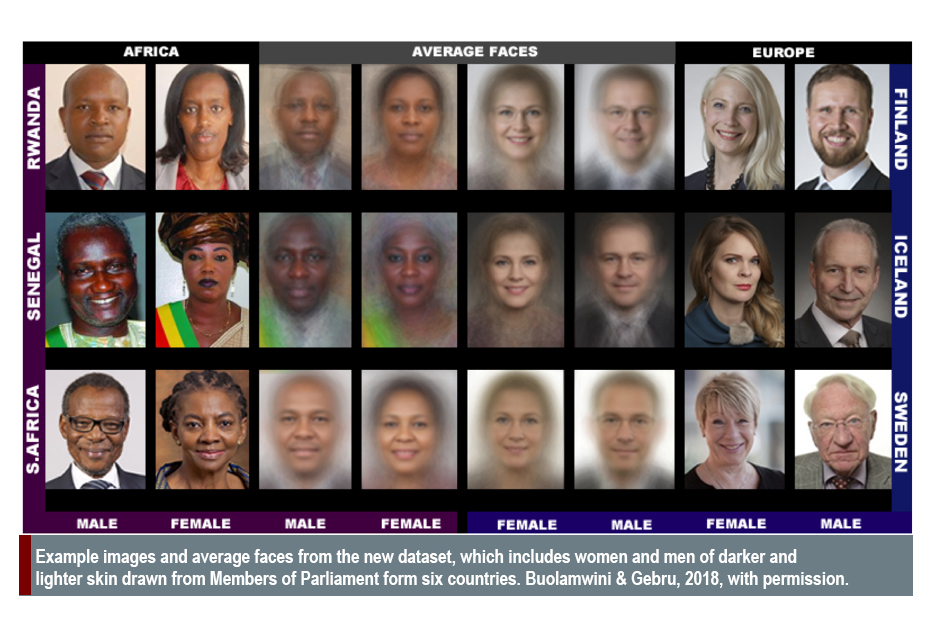

In the now well-known Gender Shades study, Buolamwini & Gebru (2018) measured the accuracy of commercial gender classification systems from Microsoft, IBM and Face++, and found that darker-skinned women were often misclassified. The systems performed better on men's faces than on women's faces, and all systems performed better on lighter skin than on darker skin. The highest error rates were 35% for darker-skinned women, 12% for darker-skinned men and 7% for lighter-skinned women, compared with less than 1% for lighter-skinned men.
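Reporting error rates separately for each subgroup is what exposes disparities like these. The sketch below, with made-up evaluation results rather than Gender Shades data, shows why the breakdown must be intersectional: grouping by gender alone or skin tone alone gives 25% error for women and for darker-skinned faces, while darker-skinned women see 40%.

```python
from collections import defaultdict

FLIP = {"woman": "man", "man": "woman"}


def error_rates(records, key):
    """Misclassification rate per group.

    records: (attributes, true_label, predicted_label) triples
    key: maps an attributes dict to a group, e.g. gender alone or (gender, skin)
    """
    errors, totals = defaultdict(int), defaultdict(int)
    for attrs, truth, predicted in records:
        group = key(attrs)
        totals[group] += 1
        errors[group] += truth != predicted
    return {group: errors[group] / totals[group] for group in totals}


def toy_cell(gender, skin, n, n_wrong):
    """Hypothetical evaluation results: n faces, the first n_wrong misclassified."""
    attrs = {"gender": gender, "skin": skin}
    return [(attrs, gender, FLIP[gender] if i < n_wrong else gender) for i in range(n)]


records = (toy_cell("woman", "darker", 10, 4) + toy_cell("man", "darker", 10, 1)
           + toy_cell("woman", "lighter", 10, 1) + toy_cell("man", "lighter", 10, 0))

print(error_rates(records, key=lambda a: a["gender"]))  # {'woman': 0.25, 'man': 0.05}
print(error_rates(records, key=lambda a: a["skin"]))    # {'darker': 0.25, 'lighter': 0.05}
print(error_rates(records, key=lambda a: (a["gender"], a["skin"])))
```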

To measure these disparities, the team developed and labelled an intersectional benchmark dataset to test gender classification performance on four subgroups: darker-skinned women, darker-skinned men, lighter-skinned women and lighter-skinned men. Since race and ethnicity labels are culturally specific, the team used skin shade to measure dataset diversity (Cook et al., 2019). Their dataset consisted of 1,270 images from three African countries (Rwanda, Senegal and South Africa) and three European countries (Iceland, Finland and Sweden).
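A benchmark balanced across the four subgroups lets each subgroup's error rate be estimated from a comparable number of faces. One simple way to build such a benchmark is stratified sampling; the sketch below is an illustrative assumption, not the procedure the Gender Shades team used, and its record format is hypothetical.

```python
import random
from collections import defaultdict
from itertools import product

CELLS = list(product(("woman", "man"), ("darker", "lighter")))


def balanced_sample(items, cell_of, cells, per_cell, seed=0):
    """Draw the same number of items from every required intersectional cell.

    Raises ValueError when a cell is missing or too small, so that
    under-represented groups are surfaced instead of silently shrunk.
    """
    by_cell = defaultdict(list)
    for item in items:
        by_cell[cell_of(item)].append(item)
    rng = random.Random(seed)  # fixed seed for a reproducible benchmark
    sample = []
    for cell in cells:
        members = by_cell.get(cell, [])
        if len(members) < per_cell:
            raise ValueError(f"cell {cell} has {len(members)} items, need {per_cell}")
        sample.extend(rng.sample(members, per_cell))
    return sample


# Hypothetical image records: (gender, skin, image_id).
pool = [(g, s, f"{g}-{s}-{i}") for g, s in CELLS for i in range(20)]
benchmark = balanced_sample(pool, lambda r: (r[0], r[1]), CELLS, per_cell=5)
print(len(benchmark))  # 20
```

Failing loudly on a small cell is deliberate: quietly sampling fewer faces for one subgroup would make its error rate the least reliable precisely where reliability matters most.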

Accuracy in Intersectional Classification

In 2019, IBM Research released a large dataset called Diversity in Faces (DiF), intended to advance the study of fairness and accuracy in FRSs (IBM, DiF).

Gendered Innovation 3: Establishing Parameters for a Diverse Set of Faces

Cosmetics

Wearing makeup has been a popular practice throughout history and across numerous cultures. Although men’s use of cosmetics is on the rise, women use makeup more. Loegel et al. (2017) report that women use makeup to give themselves an image that is compatible with cultural norms of femininity.

Facial cosmetics reduce the accuracy of both commercial and academic facial recognition methods by up to 76.21% (Dantcheva et al., 2012; Chen et al., 2015). One reason is that makeup has not been established as a parameter in publicly available face databases (Eckert et al., 2013). One proposal for developing FRSs that are robust to facial makeup is to map and correlate multiple images of the same person with and without makeup. These solutions also need to take into account different makeup practices across cultures (Guo et al., 2014).
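A much simpler illustration of using images with and without makeup is to enroll several templates per person and keep the best match score. This multi-template, max-score rule is a swapped-in technique chosen for brevity, not the method of Chen et al. (2015) or Guo et al. (2014), and the two-dimensional embeddings are hypothetical stand-ins for the output of a face encoder.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def match_score(probe, templates):
    """Best similarity between a probe and all enrolled templates of one person."""
    return max(cosine(probe, t) for t in templates)


# Hypothetical embeddings for one person.
bare_face = [1.0, 0.0]
with_makeup = [0.6, 0.8]
probe = [0.5, 0.85]  # new photo taken while wearing makeup

print(round(match_score(probe, [bare_face]), 2))               # 0.51
print(round(match_score(probe, [bare_face, with_makeup]), 2))  # 0.99
```

With only a bare-face template, the made-up probe would fall below a typical verification threshold; adding a makeup template recovers the match. The cross-cultural point above still applies: the enrolled variants need to reflect the makeup practices of the people using the system.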

Transgender

One of the emerging challenges in the field of FRSs is transgender faces, especially during transition periods. Keyes (2018) found that Automatic Gender Recognition (AGR) research ignores transgender people, with negative consequences. For instance, a transgender Uber driver was required to travel two hours to a local Uber office after a facial recognition security feature was unable to confirm their identity. The company uses Microsoft Azure’s Cognitive Services technology to reduce fraud by periodically prompting drivers to submit selfies to Uber before beginning a shift (Melendez, 2018).

Gender-affirming hormone therapy redistributes facial fat and changes the overall shape and texture of the face. Depending on the direction of transition (i.e., male to female or female to male), the most significant changes in the transformed face affect fine wrinkles and lines, stretch marks, skin thickening or thinning and texture variations. Androgen hormone therapy, for example, renders the face more angular.

Is the solution to correct bias by ensuring that plenty of trans people are included in training data? Debiasing may sound appealing, but collecting data from a community that has reason to feel uncomfortable with data collection is not the best practice (Keyes, 2018). In this case, it may be more important to revise algorithmic parameters. Existing methods indicate that the eye (or periocular) region can be used more reliably than the full face to recognize gender-transformed images, because the periocular region is less affected by hormone therapy than other facial regions (Mahalingam et al., 2014).
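The first step of a periocular approach is to crop the eye region before recognition. The sketch below assumes eye-centre coordinates from a hypothetical landmark detector and an assumed margin of half the inter-eye distance; it does not reproduce the exact region definition used by Mahalingam et al. (2014). Recognition would then run on the cropped patch instead of the full face.

```python
import math


def periocular_box(left_eye, right_eye, width, height, margin=0.5):
    """Bounding box (x0, y0, x1, y1) around both eyes, clipped to the image.

    left_eye, right_eye: (x, y) eye centres from a hypothetical landmark detector.
    margin: padding as a fraction of the inter-eye distance (assumed value).
    """
    (lx, ly), (rx, ry) = left_eye, right_eye
    pad = margin * math.hypot(rx - lx, ry - ly)
    x0 = max(0, math.floor(min(lx, rx) - pad))
    y0 = max(0, math.floor(min(ly, ry) - pad))
    x1 = min(width, math.ceil(max(lx, rx) + pad))
    y1 = min(height, math.ceil(max(ly, ry) + pad))
    return x0, y0, x1, y1


def crop(image, box):
    """Crop a row-major image (list of pixel rows) to box = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


image = [[0] * 100 for _ in range(100)]  # placeholder 100x100 grayscale image
box = periocular_box((30, 40), (70, 40), width=100, height=100)
print(box)                        # (10, 20, 90, 60)
patch = crop(image, box)
print(len(patch), len(patch[0]))  # 40 80
```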

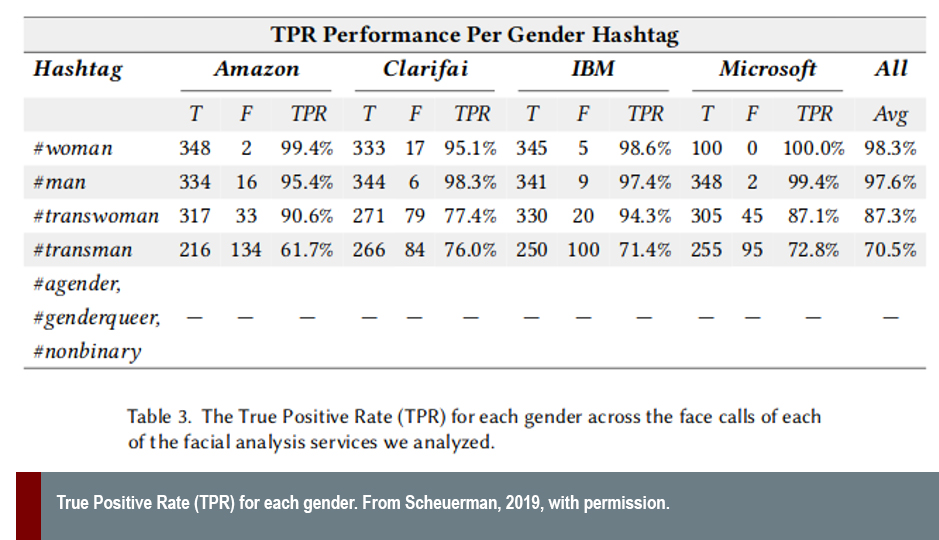

Scheuerman et al. (2019) found that four commercial services (Amazon Rekognition, Clarifai, IBM Watson Visual Recognition and Microsoft Azure) performed poorly on transgender individuals and were unable to classify non-binary genders. Using the gender hashtag provided by individuals in their Instagram posts (#woman, #man, #transwoman, #transman, #agender, #genderqueer, #nonbinary), the team calculated the accuracy of gender classification results across 2,450 images. On average, the systems categorized cisgender women (#woman) with 98.3% accuracy and cisgender men (#man) with 97.6% accuracy. Accuracy decreased for transgender individuals, averaging 87.3% for #transwoman and 70.5% for #transman. Those who identified as #agender, #genderqueer or #nonbinary were misclassified 100% of the time, because the services could only return binary gender labels.
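The 100% failure rate for non-binary identities follows directly from the label space: a classifier that can only output "woman" or "man" has no correct answer to give. The sketch below makes this explicit under stated assumptions: the hashtag-to-expected-label mapping is ours (not necessarily the one used in the study), and the predictions are made up.

```python
from collections import defaultdict

# Assumed mapping from a self-identified hashtag to the output a binary
# classifier would need to give to be counted correct. None means no
# binary output can be correct for that identity.
EXPECTED = {
    "#woman": "woman", "#transwoman": "woman",
    "#man": "man", "#transman": "man",
    "#agender": None, "#genderqueer": None, "#nonbinary": None,
}


def accuracy_by_hashtag(results):
    """results: (hashtag, predicted_label) pairs, predicted_label being 'woman' or 'man'."""
    hits, totals = defaultdict(int), defaultdict(int)
    for tag, predicted in results:
        totals[tag] += 1
        hits[tag] += predicted == EXPECTED[tag]  # a binary prediction never equals None
    return {tag: hits[tag] / totals[tag] for tag in totals}


results = [("#woman", "woman"), ("#woman", "man"), ("#nonbinary", "woman"), ("#nonbinary", "man")]
print(accuracy_by_hashtag(results))  # {'#woman': 0.5, '#nonbinary': 0.0}
```

No amount of additional training data changes the 0.0 for `#nonbinary`; only a different output space would.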

Emotion

The European Union-funded SMART project (Scalable Measures for Automated Recognition Technologies) sought to understand how smart surveillance can protect citizens against security threats while also protecting their privacy. The project presented a multi-modal emotion recognition system exploiting both audio and video information (Dobrišek et al., 2013). The experimental results show that their Gender Recognition (GR) subsystem increased overall emotion recognition accuracy from 77.4% to 81.5%.
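The reported gain of 4.1 percentage points can also be read as a reduction in error rate. The arithmetic below uses only the two accuracy figures quoted above and is not a result reported by Dobrišek et al. (2013).

```latex
\begin{aligned}
\text{error}_{\text{without GR}} &= 100\% - 77.4\% = 22.6\% \\
\text{error}_{\text{with GR}} &= 100\% - 81.5\% = 18.5\% \\
\text{relative error reduction} &= \frac{22.6 - 18.5}{22.6} = \frac{4.1}{22.6} \approx 18\%
\end{aligned}
```

In other words, adding the GR subsystem removed nearly a fifth of the errors the system made without it.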

Conclusions

FRSs can perpetuate and even amplify social patterns of injustice by consciously or subconsciously encoding human bias. Understanding underlying intersectional discrimination in society can help researchers develop more just and responsible technologies. Policy makers should ensure that biometric programs undergo thorough and transparent civil rights assessment prior to implementation.

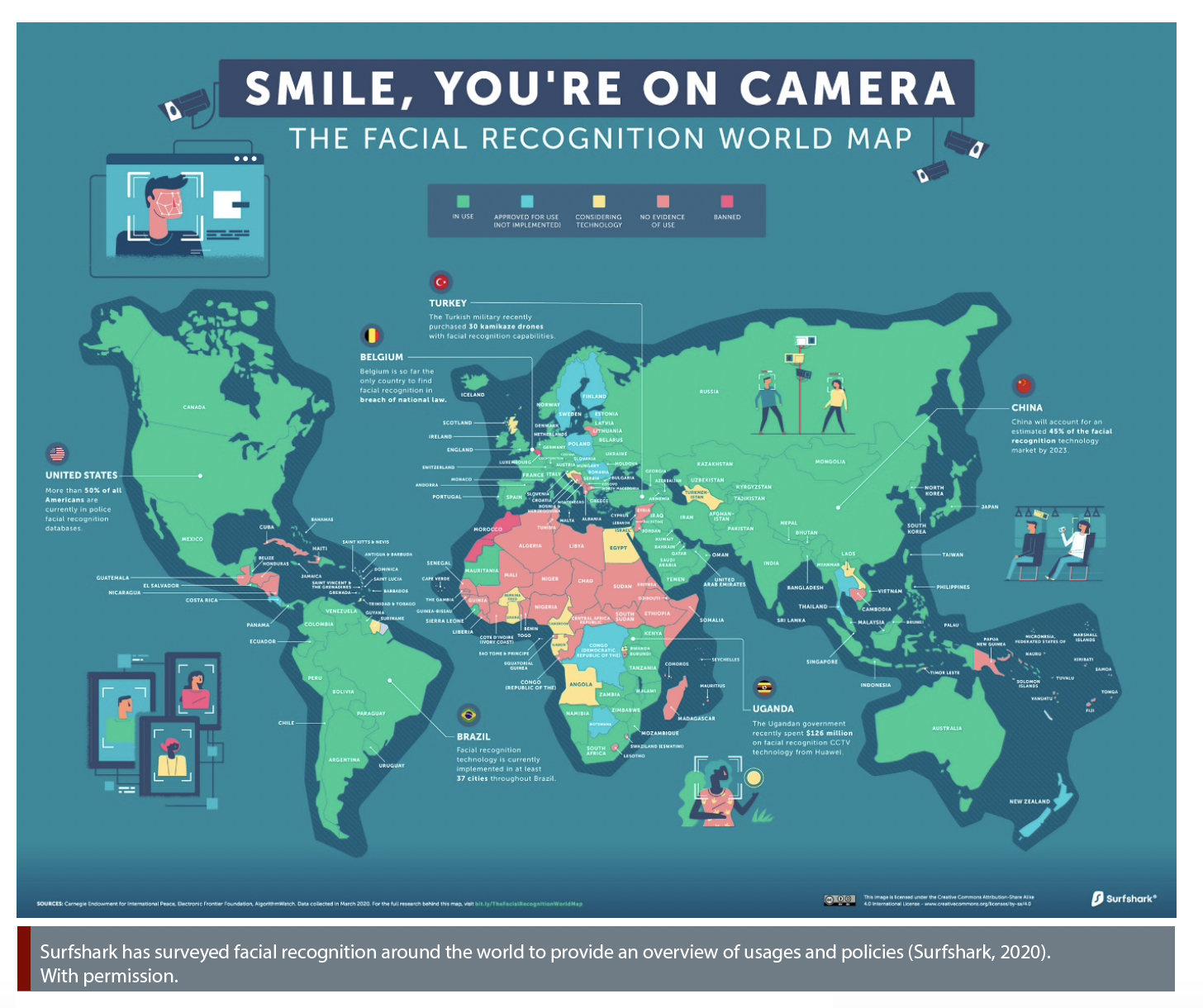

The potential misuse of facial recognition has led to several actions: Belgium has declared the use of facial recognition illegal, France and Sweden have expressly prohibited it in schools, and San Francisco, California has banned its use by local agencies, such as the transport authority or law enforcement. Companies, too, are pulling back: IBM has left the facial recognition business entirely, and Amazon has placed a one-year moratorium on police use of its facial recognition technology in response to worldwide protests against systemic racial injustice in 2020. Seeking long-term solutions, the US Algorithmic Justice League has called for the creation of a federal office similar to the US FDA to regulate facial recognition (Learned-Miller et al., 2020).

Next Steps

- Algorithms are only as good as the data they use. Training datasets should be sufficiently large and diverse to support FRSs across diverse populations and in diverse contexts.

- In the Face Recognition Vendor Test published by the US National Institute of Standards and Technology (NIST, 2019), the 1:N report (most relevant for FRSs used in bodycams) does not include a detailed breakdown of accuracy metrics for factors such as race, age and gender. This should be corrected.

- Develop fair, transparent and accountable facial analysis algorithms.

- Many of the studies and systems still adopt a binary view of gender. Research and innovation in this field must acknowledge the existence of a large number of gender-diverse individuals and create technologies that work across all of society.
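The second point above asks for 1:N accuracy broken down by factors such as race, age and gender. A minimal sketch of such a breakdown, assuming each test search is mated (the person searched for is enrolled in the gallery) and tagged with a hypothetical group label, computes a per-group false negative identification rate (FNIR): the share of searches that fail to return the right person. NIST's own reports define their metrics in more detail; this is a rank-1 simplification.

```python
from collections import defaultdict


def fnir_by_group(searches):
    """Per-group false negative identification rate for mated 1:N searches.

    searches: (group, true_identity, returned_identity) triples, where
    returned_identity is the top candidate above threshold, or None.
    A search counts as a miss when the true identity is not returned.
    """
    misses, totals = defaultdict(int), defaultdict(int)
    for group, truth, returned in searches:
        totals[group] += 1
        misses[group] += returned != truth
    return {group: misses[group] / totals[group] for group in totals}


searches = [
    ("group_1", "id_1", "id_1"),
    ("group_1", "id_2", None),  # no candidate above threshold
    ("group_2", "id_3", "id_3"),
    ("group_2", "id_4", "id_4"),
]
print(fnir_by_group(searches))  # {'group_1': 0.5, 'group_2': 0.0}
```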

Works Cited

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. Proceedings of Machine Learning Research, 81, 77-91. http://gendershades.org

Buolamwini, J. (2017). Algorithms aren’t racist. Your skin is just too dark. Hackernoon. https://hackernoon.com/algorithms-arent-racist-your-skin-is-just-too-dark-4ed31a7304b8

CASIA WebFace Vision Dataset. Retrieved 4 September, 2019, from http://pgram.com/dataset/casia-webface

Chen, C., Dantcheva, A., & Ross, A. (2015). An ensemble of patch-based subspaces for makeup-robust face recognition. Information Fusion, 32(B), 80-92. https://www.cse.msu.edu/~rossarun/pubs/ChenEnsembleFaceMakeup_INFFUS2016.pdf

Cook, C. M., Howard, J. J., Sirotin, Y. B., Tipton, J. L., & Vemury, A. R. (2019). Demographic effects in facial recognition and their dependence on image acquisition: an evaluation of eleven commercial systems. IEEE Transactions on Biometrics, Behavior, and Identity Science, 1(1), 32-41.

Dantcheva, A., Chen, C., & Ross, A. (2012). Can facial cosmetics affect the matching accuracy of face recognition systems? 2012 IEEE Fifth International Conference on Biometrics: Theory, Applications and Systems (BTAS), (pp. 391-398). IEEE. https://ieeexplore.ieee.org/document/6374605/

Dobrišek, S., Gajšek, R., Mihelič, F., Pavešić, N., & Štruc, V. (2013). Towards efficient multi-modal emotion recognition. International Journal of Advanced Robotic Systems, 10(1), 53. https://journals.sagepub.com/doi/10.5772/54002

Doerrfeld, B. (2015). 20+ Emotion recognition APIs that will leave you impressed, and concerned. Nordic APIs Blog. https://nordicapis.com/20-emotion-recognition-apis-that-will-leave-you-impressed-and-concerned/

Eckert, M. L., Kose, N., & Dugelay, J. L. (2013). Facial cosmetics database and impact analysis on automatic face recognition. 2013 IEEE 15th International Workshop on Multimedia Signal Processing (MMSP) (pp. 434-439). IEEE. https://ieeexplore.ieee.org/abstract/document/6659328

Garcia, M. (2016). Racist in the machine: the disturbing implications of algorithmic bias. World Policy Journal, 33(4), 111-117.

Guo, G., Wen, L., & Yan, S. (2014). Face authentication with makeup changes. IEEE Transactions on Circuits and Systems for Video Technology, 24(5), 814-825.

Howard, A., Zhang, C., & Horvitz, E. (2017). Addressing bias in machine learning algorithms: a pilot study on emotion recognition for intelligent systems. 2017 IEEE Workshop on Advanced Robotics and Its Social Impacts (ARSO). https://doi.org/10.1109/ARSO.2017.8025197

IBM Diversity in Faces. Retrieved 4 September, 2019, from https://www.research.ibm.com/artificial-intelligence/trusted-ai/diversity-in-faces/

iBorderCtrl Project. Retrieved 4 September, 2019, from https://www.iborderctrl.eu.

Keyes, O. (2018). The misgendering machines: trans/HCI implications of automatic gender recognition. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW). https://doi.org/10.1145/3274357

Labeled Faces in the Wild. University of Massachusetts, Amherst. Retrieved 4 September, 2019, from http://vis-www.cs.umass.edu/lfw/

Learned-Miller, E., Ordóñez, V., Morgenstern, J., & Buolamwini, J. (2020). Facial Recognition Technologies in the Wild. https://global-uploads.webflow.com/5e027ca188c99e3515b404b7/5ed1145952bc185203f3d009_FRTsFederalOfficeMay2020.pdf. Accessed 11 June 2020.

Liu, Z., Luo, P., Wang, X., & Tang, X. CelebFaces Attributes (CelebA) Dataset. Retrieved 4 September, 2019, from http://mmlab.ie.cuhk.edu.hk/projects/CelebA.html

Loegel, A., Courrèges, S., Morizot, F., & Fontayne, P. (2017). Makeup, an essential tool to manage social expectations surrounding femininity? Movement & Sport Sciences, 96(2), 19-25. https://www.cairn.info/revue-movement-and-sport-sciences-2017-2-page-19.htm

Mahalingam, G., Ricanek, K., & Albert, A. M. (2014). Investigating the periocular-based face recognition across gender transformation. IEEE Transactions on Information Forensics and Security, 9(12), 2180-2192.

Melendez, S. (2018, August 9). Uber driver troubles raise concerns about transgender face recognition. Fast Company. Retrieved 24 July, 2019, from https://www.fastcompany.com/90216258/uber-face-recognition-tool-has-locked-out-some-transgender-drivers

National Institute of Standards and Technology (NIST). (2019). FRVT 1:N Identification. U.S. Department of Commerce. Retrieved 4 September, 2019, from https://www.nist.gov/programs-projects/frvt-1n-identification

Raji, I. D., & Buolamwini, J. (2019). Actionable auditing: investigating the impact of publicly naming biased performance results of commercial AI products. Proceedings of the AAAI/ACM Conference on Artificial Intelligence, Ethics, and Society. https://www.media.mit.edu/publications/actionable-auditing-investigating-the-impact-of-publicly-naming-biased-performance-results-of-commercial-ai-products/

Scalable measures for automated recognition technologies (SMART) project. CORDIS. Retrieved 4 September, 2019, from https://cordis.europa.eu/project/rcn/99234/factsheet/en

Scheuerman, M. K., Paul, J. M., & Brubaker, J. (2019). How computers see gender: an evaluation of gender classification in commercial facial analysis and image labeling services. Proceedings of the ACM on Human-Computer Interaction, 3(CSCW). https://docs.wixstatic.com/ugd/eb2cd9_963fbde2284f4a72b33ea2ad295fa6d3.pdf

Surfshark. Retrieved 15 June, 2020, from https://surfshark.com/facial-recognition-map

VGGFace2. Retrieved 4 September, 2019, from http://www.robots.ox.ac.uk/~vgg/data/vgg_face2/

Zhao, J., Wang, T., Yatskar, M., Ordonez, V., & Chang, K. W. (2017). Men also like shopping: reducing gender bias amplification using corpus-level constraints. arXiv Preprint. https://arxiv.org/abs/1707.09457

Facial recognition systems can identify people in crowds, analyze emotion, and detect gender, age, race, sexual orientation, etc. These systems are often employed in recruitment, authorizing payments, security, surveillance and unlocking phones. Yet these systems can also discriminate based on characteristics such as race and gender, and their intersections. This case study looks at ways to overcome this bias.

1. Gender. One research team found that when photographs depict a man in a kitchen, automated image captioning algorithms systematically misidentify the individual as a woman. Why? In part, because women are portrayed in cooking contexts 33% more frequently than men in training sets. The Fix: Reconfigure training data.

2. Intersection of Gender and Race. A well-known study, Gender Shades, measured the accuracy of commercial gender classification systems from Microsoft, IBM and Face++. It found that darker-skinned women were often misclassified. Several systems performed better on men's faces than on women's faces, and all systems performed better on lighter skin than darker skin. The highest error rates were 35% for darker-skinned women, 12% for darker-skinned men, 7% for lighter-skinned women, and less than 1% for lighter-skinned men. The Fix: The team developed and labeled a new intersectional dataset for four subgroups: darker-skinned women, darker-skinned men, lighter-skinned women, and lighter-skinned men. Their dataset consisted of 1,270 images from three African countries (Rwanda, Senegal, and South Africa) and three European countries (Iceland, Finland, and Sweden). After the audit results were published, the commercial systems all reduced their accuracy disparities (Raji & Buolamwini, 2019).

4. Transgender. One emerging challenge is recognizing transgender faces, especially during transition periods. Gender-affirming hormone therapy can redistribute facial fat and change the overall shape and texture of the face. The Fix: In this case, it may be important to revise algorithmic parameters to rely on the eye (or periocular) region. The trans* community also warns that collecting data from an endangered community may not be the best practice.

Facial recognition systems can perpetuate and even amplify patterns of injustice by consciously or unconsciously encoding human bias. Understanding underlying intersectional discrimination in society can help researchers develop more just and responsible technologies. Policy makers should ensure that biometric programs undergo thorough and transparent civil rights assessment prior to implementation.